Compare commits

50 Commits

9da4a2232c...main

| SHA1 |
|---|
| 6a66f8439d |
| 38045f9ba6 |
| 6309e91272 |
| 807dd55bac |
| 2ec7d35342 |
| 4c45779312 |
| 92f2f4f6d2 |
| 5ec342cbc4 |
| f090dc18ba |
| 7c2cc2628b |
| c6ef23d54b |
| 5715d17312 |
| 51b4abf66e |
| 907a5d6be6 |
| f96c594a8c |
| ee0441a40d |
| cfeeb29340 |
| 327cb7ccfc |
| 6cfea75681 |
| 8718396f57 |
| aa4854df14 |
| e172464058 |
| 50185fb534 |
| f488a5319c |
| 4f25763489 |
| ddc2972bd2 |
| ca5ae17177 |
| fe169c00be |
| 84b8797992 |
| f3b772b83b |
| b168c95b54 |
| f5eac67aff |
| 48ad6e6be9 |
| 79da26a21d |
| a8580b87f7 |
| 09d302c309 |
| 1549496248 |
| 8c24252cb2 |
| 44a6bb3fbf |
| 109c9735a5 |
| 81ce833ebe |
| 197c60615d |
| aabb86b6f3 |
| 174f01058d |
| 19cbcd8e53 |
| 982bd91c3b |
| a9747b3071 |
| 5b86be18b8 |
| 70e5370eb4 |
| 24f2829222 |

@@ -1,8 +0,0 @@

```yaml
# This configuration file was automatically generated by Gitpod.
# Please adjust to your needs (see https://www.gitpod.io/docs/config-gitpod-file)
# and commit this file to your remote git repository to share the goodness with others.

tasks:
  - init: npm install
```

@@ -1,98 +1,24 @@

---
layout: article
title: Admin Services
description: Data Controller contains a number of admin-only web services, such as DB Export, Lineage Generation, and Data Catalog refresh.
description: The Administrator Screen provides useful system information and buttons for various administrator actions.
og_title: Administrator Screen
og_image: /img/admininfo.png
---

# Admin Services
## Administrator Screen

Several web services have been defined to provide additional functionality outside of the user interface. These somewhat-hidden services must be called directly, using a web browser.
The admin screen (under user profile / System) displays a number of useful system parameters, as well as several buttons for executing administrator-specific actions.

In a future version, these features will be made available from an Admin screen (so there will be no need to modify URLs manually).

The URL is made up of several components:
Button details are as follows:

* `SERVERURL` -> the domain (and port) on which your SAS server resides
* `EXECUTOR` -> either `SASStoredProcess` for SAS 9, or `SASJobExecution` for Viya
* `APPLOC` -> the root folder location in which the Data Controller backend services were deployed
* `SERVICE` -> the actual Data Controller service being described. May include additional parameters.

|Button|Description|
|---|---|
|Refresh Data Catalog|Update Data Catalog for ALL libraries. More info [here](/dcu-datacatalog).|
|Download Configuration|Downloads a zip file containing the current database configuration, useful for migrating to a different Data Controller database instance.|
|Update Licence Key|Link to the screen for providing a new Data Controller licence key.|
|Export DC Library DDL|COMING SOON!! <br>Exports the Data Controller control library in DB-specific DDL (eg SAS, PGSQL, TSQL) and allows an optional schema name to be included.|

To illustrate the above, consider the following URL:

[https://viya.4gl.io/SASJobExecution/?_program=/Public/app/viya/services/admin/exportdb&flavour=PGSQL](https://viya.4gl.io/SASJobExecution/?_program=/Public/app/viya/services/admin/exportdb&flavour=PGSQL)

This is broken down into:

* `$SERVERURL` = `https://viya.4gl.io`
* `$EXECUTOR` = `SASJobExecution`
* `$APPLOC` = `/Public/app/viya`
* `$SERVICE` = `services/admin/exportdb&flavour=PGSQL`

The sections below describe only the `$SERVICE` component. You can construct the full URL as follows:

* `$SERVERURL/$EXECUTOR?_program=$APPLOC/$SERVICE`
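
The URL template above can be sketched in Python. This is a minimal illustration, not part of Data Controller: `build_dc_url` is a hypothetical helper, and it assumes that anything after the first `&` in `$SERVICE` is an extra query parameter (such as `flavour`) rather than part of the program path. The program path is percent-encoded so that folders containing spaces still yield a valid URL.

```python
from urllib.parse import parse_qs, quote, urlsplit


def build_dc_url(server_url: str, executor: str, app_loc: str, service: str) -> str:
    """Assemble $SERVERURL/$EXECUTOR?_program=$APPLOC/$SERVICE (hypothetical helper)."""
    # Assumption: "services/admin/exportdb&flavour=PGSQL" is a program path
    # followed by extra "&name=value" parameters.
    path, sep, extra = service.partition("&")
    # Percent-encode the program path (eg spaces in folder names), keeping "/".
    program = quote(f"{app_loc.rstrip('/')}/{path}", safe="/")
    url = f"{server_url.rstrip('/')}/{executor}?_program={program}"
    return f"{url}&{extra}" if sep else url


if __name__ == "__main__":
    url = build_dc_url(
        "https://viya.4gl.io",
        "SASJobExecution",
        "/Public/app/viya",
        "services/admin/exportdb&flavour=PGSQL",
    )
    print(url)
    # The extra parameter arrives at the server as its own query parameter.
    print(parse_qs(urlsplit(url).query))
```

Splitting on the first `&` keeps the `$SERVICE` strings from the sections below usable as-is, while still letting the program path be encoded separately.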

## Export Config

This service provides a zip file containing the current database configuration. This is useful for migrating to a different Data Controller database instance.

EXAMPLE:

* `services/admin/exportconfig`

## Export Database

Exports the Data Controller control library in DB-specific DDL. The following URL parameters may be added:

* `&flavour=` (only PGSQL supported at this time)
* `&schema=` (optional, if a target schema is needed)

EXAMPLES:

* `services/admin/exportdb&flavour=PGSQL&schema=DC`
* `services/admin/exportdb&flavour=PGSQL`

## Refresh Data Catalog

In any SAS estate, it's unlikely that the size & shape of data will remain static. By running a regular Catalog Scan, you can track changes such as:

- Library Properties (size, schema, path, number of tables)
- Table Properties (size, number of columns, primary keys)
- Variable Properties (presence in a primary key, constraints, position in the dataset)

The data is stored with SCD2, so you can actually **track changes to your model over time**! Curious when that new column appeared? Just check the history in [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs).

To run the refresh process, call the service directly, for example:

* `services/admin/refreshcatalog`
* `services/admin/refreshcatalog&libref=MYLIB`

The optional `&libref=` parameter runs the process for a single library. Just provide the libref.

When doing a full scan, the following LIBREFs are ignored:

* 'CASUSER'
* 'MAPSGFK'
* 'SASUSER'
* 'SASWORK'
* 'STPSAMP'
* 'TEMP'
* 'WORK'

Additional LIBREFs can be excluded by adding them to the `DCXXXX.MPE_CONFIG` table (where `var_scope='DC_CATALOG' and var_name='DC_IGNORELIBS'`). Use a pipe (`|`) symbol to separate them. This can be useful where there are connection issues for a particular library.
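
The exclusion rule can be pictured as a set difference: the built-in list above, plus whatever pipe-separated librefs are configured under `DC_IGNORELIBS`. The Python sketch below is illustrative only. `librefs_to_scan` is a hypothetical name, and treating librefs case-insensitively and ignoring stray whitespace around the pipes are our assumptions, not documented Data Controller behaviour.

```python
DEFAULT_IGNORED = {"CASUSER", "MAPSGFK", "SASUSER", "SASWORK", "STPSAMP", "TEMP", "WORK"}


def librefs_to_scan(all_librefs: list[str], dc_ignorelibs: str = "") -> list[str]:
    """Return the librefs a full catalog scan would visit (hypothetical helper)."""
    # eg dc_ignorelibs = "BADDB|LEGACY" -> {"BADDB", "LEGACY"}
    extra = {lib.strip().upper() for lib in dc_ignorelibs.split("|") if lib.strip()}
    ignored = DEFAULT_IGNORED | extra
    # Keep the remaining librefs in their original order.
    return [lib for lib in all_librefs if lib.upper() not in ignored]


if __name__ == "__main__":
    print(librefs_to_scan(["WORK", "SASHELP", "MYLIB", "BADDB", "TEMP"], "BADDB|LEGACY"))
```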

Be aware that the scan process can take a long time if you have a lot of tables!

Output tables (all SCD2):

* [MPE_DATACATALOG_LIBS](/tables/mpe_datacatalog_libs) - Library attributes
* [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs) - Table attributes
* [MPE_DATACATALOG_VARS](/tables/mpe_datacatalog_vars) - Column attributes
* [MPE_DATASTATUS_LIBS](/tables/mpe_datastatus_libs) - Frequently changing library attributes (such as size & number of tables)
* [MPE_DATASTATUS_TABS](/tables/mpe_datastatus_tabs) - Frequently changing table attributes (such as size & number of rows)

## Update Licence Key

When navigating Data Controller, the URL always contains a hash (`#`). To access the licence key screen, remove all content to the RIGHT of the hash and append the following string: `/licensing/update`.

If you are using the https protocol, you will have two keys (licence key and activation key). In http mode, there is just one key (the licence key), which is used for both boxes.
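
The URL edit described above amounts to keeping everything up to and including the hash, then appending the route. A minimal Python sketch; `licence_update_url` is a hypothetical helper and the host in the example is a placeholder.

```python
def licence_update_url(current_url: str) -> str:
    """Rewrite a Data Controller URL so it points at the licence key screen."""
    base, sep, _ = current_url.partition("#")
    if not sep:
        raise ValueError("expected a Data Controller URL containing '#'")
    # Drop everything to the right of the hash, then append the licensing route.
    return f"{base}#/licensing/update"


if __name__ == "__main__":
    print(licence_update_url("https://sas.example.com/dc/#/view/data"))
```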

@@ -40,7 +40,7 @@ The Editor screen lets users who have been pre-authorised (via the `DATACTRL.MPE

1 - *Filter*. The user can filter before proceeding to perform edits.

2 - *Upload*. If you have a lot of data, you can [upload it directly](dcu-fileupload). The changes are then approved in the usual way.
2 - *Upload*. If you have a lot of data, you can [upload it directly](files). The changes are then approved in the usual way.

|

||||

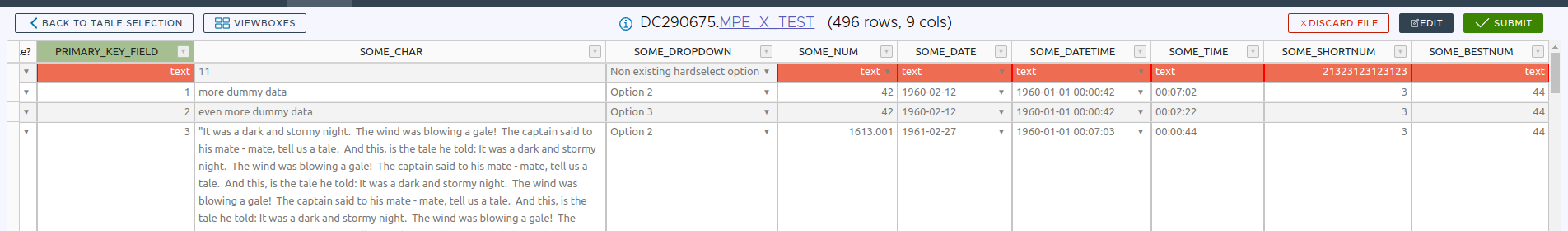

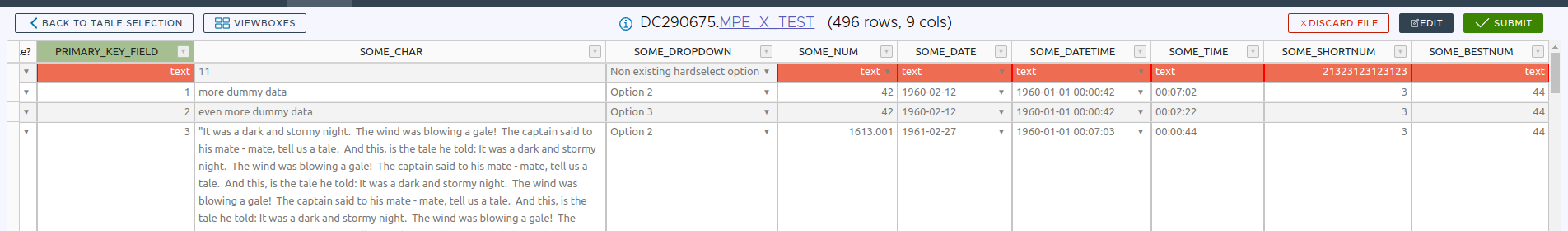

3 - *Edit*. This is the main interface, where data is displayed in tabular format. The first column is always "Delete?", which allows you to mark rows for deletion. Note that removing a row from display does not mark it for deletion! It simply means that the row is not part of the changeset being submitted.
The next set of columns form the Primary Key, and are shaded grey. If the table has a surrogate / retained key, then it is the Business Key that is shown here (the RK field is calculated / updated at the backend). For SCD2 type tables, the 'validity' fields are not shown. It is assumed that the user is always working with the current version of the data, and the view is filtered as such.

@@ -2,7 +2,8 @@

## Overview

Dates & datetimes are actually stored as plain numerics in regular SAS tables. In order for Data Controller to recognise these values as dates / datetimes, a format must be applied.
Dates & datetimes are stored as plain numerics in regular SAS tables. In order for Data Controller to recognise these values as dates / datetimes, a format must be applied.
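
To see why the format matters: a SAS date is a count of days since 1 January 1960, and a SAS datetime is a count of seconds since midnight on that day. Without a format, Data Controller only sees the raw number. The Python sketch below decodes both representations; the helper names are hypothetical and the code is purely illustrative.

```python
from datetime import date, datetime, timedelta

SAS_EPOCH = datetime(1960, 1, 1)  # day 0 / second 0 for SAS dates and datetimes


def sas_date_to_python(days: float) -> date:
    """Decode a SAS date value (days since 1960-01-01)."""
    return (SAS_EPOCH + timedelta(days=days)).date()


def sas_datetime_to_python(seconds: float) -> datetime:
    """Decode a SAS datetime value (seconds since 1960-01-01 00:00:00)."""
    return SAS_EPOCH + timedelta(seconds=seconds)


if __name__ == "__main__":
    # The same raw numeric means very different things depending on its format.
    raw = 22000
    print(sas_date_to_python(raw), sas_datetime_to_python(raw))
```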

@@ -14,10 +15,12 @@ Supported date formats:
* YYMMDD.
* E8601DA.
* B8601DA.
* NLDATE.

Supported datetime formats:

* DATETIME.
* NLDATM.

Supported time formats:

@@ -34,7 +37,10 @@ proc metalib;

```sas
run;
```

If you have other dates / datetimes / times you would like us to support, do [get in touch](http://datacontroller.io/contact)!

!!! note
    Data Controller does not support decimals when EDITING. For datetimes, this means that values must be rounded to 1 second (milliseconds are not supported).
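
If your source system produces fractional datetimes, one option is to round them to whole seconds before editing or uploading. The Python sketch below rounds halves away from zero, which to our understanding matches the SAS `ROUND` function with a rounding unit of 1; Python's built-in `round()` rounds halves to even instead, hence the explicit helper. The function name is hypothetical and this is not part of Data Controller.

```python
import math


def round_to_second(sas_datetime: float) -> float:
    """Round a SAS datetime (seconds since 1960) to the nearest whole second.

    Halves are rounded away from zero; Python's round() would use banker's rounding.
    """
    return math.copysign(math.floor(abs(sas_datetime) + 0.5), sas_datetime)


if __name__ == "__main__":
    print(round_to_second(1_987_654_321.678))
```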

If you have other dates / datetimes / times you would like us to support, do [get in touch](https://datacontroller.io/contact)!

@@ -14,4 +14,11 @@ By default, Data Controller will work with the SAS Groups defined in Viya, Metad

## Data Controller Admin Group

When configuring Data Controller for the first time, a group is designated as the 'admin' group. This group has unrestricted access to Data Controller. To change this group, modify the `%let dc_admin_group=` entry in the settings program. To prevent others from changing this group, ensure the containing folder is write-protected!
When configuring Data Controller for the first time, a group is designated as the 'admin' group. This group has unrestricted access to Data Controller. To change this group, modify the `%let dc_admin_group=` entry in the settings program, located as follows:

* **SAS Viya:** `$(appLoc)/services/settings.sas`
* **SAS 9:** `$(appLoc)/services/public/Data_Controller_Settings`
* **SASjs Server:** `$(appLoc)/services/public/settings.sas`

To prevent others from changing this group, ensure that the Data Controller appLoc (deployment folder) is write-protected, eg using RM (metadata) or Viya Authorisation rules.

@@ -27,6 +27,9 @@ By default, a maximum of 100 observations can be edited in the browser at one ti
* Number (and size) of columns
* Speed of client machine (laptop/desktop)

### DC_REQUEST_LOGS
On SASjs Server and SAS 9 server types, at the end of each DC SAS request, a record is added to the [MPE_REQUESTS](/tables/mpe_requests) table. In some situations this can cause table locks. To prevent this issue from occurring, the `DC_REQUEST_LOGS` option can be set to `NO` (default is `YES`).

### DC_RESTRICT_EDITRECORD
Setting this to YES prevents the EDIT RECORD dialog from appearing in the EDIT screen, by removing the "Edit Row" option from the right-click menu and the "ADD RECORD" button from the bottom left.

@@ -37,8 +37,8 @@ This is a required field.

The loadtype determines the nature of the update to be applied. Valid values are as follows:

- UPDATE. This is the most basic type - simply provide the primary key fields in the `BUSKEY` column.
- FORMAT_CAT. For updating Format Catalogs, the BUSKEY should be `FMTNAME START`. See [formats](/formats).
- UPDATE. This is the most basic type, and any updates will happen 'in place'. Simply provide the primary key fields in the `BUSKEY` column.
- TXTEMPORAL. This signifies an SCD2 type load. For this type, the validity fields (valid from, valid to) should be specified in the `VAR_TXFROM` and `VAR_TXTO` fields. The table itself should include `VAR_TXFROM` in the physical key. The remainder of the primary key fields (not including `VAR_TXFROM`) should be specified in `BUSKEY`.
- BITEMPORAL. These tables have two time dimensions - a version history and a business history. The version history (SCD2) fields should be specified in `VAR_TXFROM` and `VAR_TXTO`, and the business history fields in `VAR_BUSFROM` and `VAR_BUSTO`. Both the `VAR_TXFROM` and `VAR_BUSFROM` fields should be in the physical key of the actual table, but should NOT be specified in the `BUSKEY` field.
- REPLACE. This loadtype simply deletes all the rows and appends the staged data. Changes are NOT added to the audit table. In the diff screen, previous rows are displayed as deleted and staged rows as new (modified values are not displayed). This can be useful for updating single-row tables.
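
The difference between an in-place UPDATE, a TXTEMPORAL (SCD2) load and a REPLACE can be sketched in Python on lists of dicts. This is a simplified model for illustration only, not Data Controller's implementation: it ignores deletions, does not skip unchanged rows, and the open-ended "valid to" value `HIGH_DATE` is our own assumption. The `tx_from` / `tx_to` arguments stand for the columns named in `VAR_TXFROM` and `VAR_TXTO`.

```python
from datetime import datetime

# Assumption: the "valid to" value that marks the current (open) record.
HIGH_DATE = datetime(5999, 12, 31, 23, 59, 59)


def _key(row: dict, buskey: list[str]) -> tuple:
    return tuple(row[col] for col in buskey)


def apply_update(table: list[dict], staged: list[dict], buskey: list[str]) -> list[dict]:
    """UPDATE: change matching rows in place and append rows with new keys."""
    index = {_key(row, buskey): row for row in table}
    for row in staged:
        key = _key(row, buskey)
        if key in index:
            index[key].update(row)
        else:
            table.append(dict(row))
            index[key] = table[-1]
    return table


def apply_txtemporal(table: list[dict], staged: list[dict], buskey: list[str],
                     tx_from: str, tx_to: str, now: datetime) -> list[dict]:
    """TXTEMPORAL (SCD2): close the current version, then add the new one."""
    for row in staged:
        key = _key(row, buskey)
        for existing in table:
            if _key(existing, buskey) == key and existing[tx_to] == HIGH_DATE:
                existing[tx_to] = now  # close out the current record
        table.append({**row, tx_from: now, tx_to: HIGH_DATE})
    return table


def apply_replace(table: list[dict], staged: list[dict]) -> list[dict]:
    """REPLACE: delete every row, then append the staged data."""
    table[:] = [dict(row) for row in staged]
    return table
```

Only the TXTEMPORAL variant keeps the previous value of a changed row (with its validity closed), which is what makes the history queryable later.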

@@ -48,6 +48,9 @@ This is a required field.

!!! Note
    Support for BITEMPORAL loads is restricted, in the sense that it is only possible to load data at a single point in time (there is no support for loading _multiple_ business date ranges for a _specific_ BUSKEY). The workaround is simply to load each date range separately. As a result of this restriction, the EDIT page will only show the latest business date range for each key. To modify earlier values, a filter should be applied.

!!! Warning
    If your target table contains referential constraints (eg primary key values that are linked to a child table with a corresponding foreign key), then this will cause problems with the UPDATE and REPLACE load types, because both involve delete operations. If removing these constraints is not an option, the workaround is to create a separate (mirror) table, and update that using PRE-EDIT and POST-APPROVE hook scripts. Please contact Data Controller support for advice / assistance.

### BUSKEY

The business (natural) key of the table. For SCD2 / Bitemporal, this does NOT include the validity dates. For Retained / Surrogate key tables, this contains the actual surrogate key - the underlying fields that are used to create the surrogate key are specified in [RK_UNDERLYING](#rk_underlying).

@@ -95,8 +98,8 @@ Leave blank if not required.

SAS Developer Notes:

* Target dataset: `work.STAGING_DS`
* Base libref.table or catalog variable: `&orig_libds`
* Staged dataset: `work.STAGING_DS`
* Target libref.table or catalog variable: `&orig_libds`

If your DQ check means that the program should not be submitted, then simply exit with `&syscc` greater than 4. You can even send a message back to the user by using the [mp_abort](https://core.sasjs.io/mp__abort_8sas.html) macro:

@@ -119,8 +122,8 @@ Leave blank if not required.

SAS Developer Notes:

* Target dataset: `work.STAGING_DS`
* Base libref.table or catalog variable: `&orig_libds`
* Staged dataset: `work.STAGING_DS`
* Target libref.table or catalog variable: `&orig_libds`

### POST_APPROVE_HOOK

@@ -185,7 +188,7 @@ Leave blank unless using retained / surrogate keys.

### AUDIT_LIBDS

If this field is blank (ie empty, missing), **every** change is captured in the [MPE_AUDIT](/tables/mpe_audit.md). This can result in large data volumes for frequently changing tables.
If this field is blank (ie empty, missing), **every** change is captured in the [MPE_AUDIT](/tables/mpe_audit). This can result in large data volumes for frequently changing tables.

Alternative options are:

@@ -216,3 +219,6 @@ To illustrate:

* Physical filesystem (ends in .sas): `/opt/sas/code/myprogram.sas`
* Logical filesystem: `/Shared Data/stored_processes/mydatavalidator`

!!! warning
    Do not place your hook scripts inside the Data Controller (logical) application folder, as they may be inadvertently lost during a deployment (eg in the case of a backup-and-deploy-new-instance approach).

@@ -22,15 +22,12 @@ The Stored Processes are deployed using a SAS Program. This should be executed

```sas
%let appLoc=/Shared Data/apps/DataController; /* CHANGE THIS!! */
filename dc url "https://git.datacontroller.io/dc/dc/releases/download/vX.X.X/sas9.sas"; /* use correct release */
%let serverName=SASApp;
filename dc url "https://git.datacontroller.io/dc/dc/releases/download/latest/sas9.sas";
%inc dc;
```

If you don't have internet access from SAS, download `sas9.sas` from [here](https://git.datacontroller.io/dc/dc/releases), and change the `compiled_apploc` on line 2:

You can also change the `serverName` here, which is necessary if you are using a logical server other than `SASApp`.
If you don't have internet access from SAS, download `sas9.sas` from [here](https://git.datacontroller.io/dc/dc/releases), and change the initial `compiled_apploc` and `compiled_serverName` macro variable assignments as necessary.

#### 2 - Deploy the Frontend

@@ -39,10 +36,18 @@ The Data Controller frontend comes pre-built, and ready to deploy to the root of
Deploy as follows:

1. Download the `frontend.zip` file from: [https://git.datacontroller.io/dc/dc/releases](https://git.datacontroller.io/dc/dc/releases)
2. Unzip and place in the [htdocs folder of your SAS Web Server](https://sasjs.io/frontend-deployment/#sas9-deploy) - typically `!SASCONFIG/LevX/Web/WebServer/htdocs`.
3. Open the `index.html` file and update the values for `appLoc` (per SAS code above) and `serverType` (to `SAS9`).
2. Unzip and place in the [htdocs folder of your SAS Web Server](https://sasjs.io/frontend-deployment/#sas9-deploy) - typically a subdirectory of: `!SASCONFIG/LevX/Web/WebServer/htdocs`.
3. Open the `index.html` file and update the values in the `<sasjs>` tag as follows:

You can now open the app at `https://YOURWEBSERVER/unzippedfoldername` and follow the configuration steps (DC Physical Location and Admin Group) to complete deployment.
* `appLoc` - same as per the SAS code in the section above
* `serverType` - change this to `SAS9`
* `serverUrl` - provide only if your SAS Mid Tier is on a different domain from the web server (protocol://SASMIDTIERSERVER:port)
* `loginMechanism` - set to `Redirected` if using SSO or 2FA
* `debug` - set to `true` to debug issues on startup (otherwise it's faster to leave it off, and turn it on in the application itself when needed)

The remaining properties are not relevant for a SAS 9 deployment and can be **safely ignored**.

You can now open the app at `https://YOURWEBSERVER/unzippedfoldername` (step 2 above) and follow the configuration steps (DC Physical Location and Admin Group) to complete deployment.

#### 3 - Run the Configurator

@@ -146,9 +151,36 @@ The items deployed to metadata include:

We strongly recommend that the Data Controller configuration tables are stored in a database for concurrency reasons.

We have customers in production using Oracle, Postgres, Netezza and SQL Server, to name a few. Contact us for support with DDL and migration steps for your chosen vendor.
We have customers in production using Oracle, Postgres, Netezza, Redshift and SQL Server, to name a few. Contact us for support with DDL and migration steps for your chosen vendor.

!!! note
    Data Controller does NOT modify schemas! It will not create or drop tables, or add/modify columns or attributes. Only data _values_ (not the model) can be modified using this tool.

To caveat the above - it is also quite common for customers to use a BASE engine library. Data Controller ships with mechanisms to handle locking (internally), but it cannot handle external contention, such as that caused when end users open datasets directly, eg with Enterprise Guide or Base SAS.

## Redeployment

The full redeployment process is as follows:

* Back up metadata (export DC folder as SPK file)
* Back up the physical tables in the DC library
* Do a full deploy of a brand new instance of DC
    - To a new metadata folder
    - To a new frontend folder (if full deploy)
* _Delete_ the **new** DC library (metadata + physical tables)
* _Move_ the **old** DC library (metadata only) to the new DC metadata folder. You will need to use DI Studio to do this (you can't move folders using SAS Management Console)
* Copy the _content_ of the old `services/public/Data_Controller_Settings` STP to the new one
    - This will link the new DC instance to the old DC library / logs directory
    - It will also re-apply any site-specific DC mods
* Run any/all DB migrations between the old and new DC version
    - See [migrations](https://git.datacontroller.io/dc/dc/src/branch/main/sas/sasjs/db/migrations) folder
    - Update the metadata of the SAS Library, using DI Studio, to capture the model changes
* Test and make sure the new instance works as expected
* Delete (or rename) the **old** instance
    - Metadata + frontend, NOT the underlying DC library data
* Rename the new instance so that it has the same name as the old one
    - Both frontend and metadata
* Run a smoke test to be sure everything works!

If you are unfamiliar with, or unsure about, the above steps, don't hesitate to contact the Data Controller team for assistance and support.

@@ -97,3 +97,9 @@ This can happen when importing with Data Integration Studio and your user profil
This can happen if you enter the wrong `serverName` when deploying the SAS program on an EBI platform. Make sure it matches an existing Stored Process Server Context.

The error may also be thrown due to an encoding issue - changing to a UTF-8 server has helped at least one customer.

## Determining Application Version

The app version is bundled into the frontend during the release, and is visible by clicking your username in the top right.

You can also determine the app version (and SASjs version, and build time) by opening the browser Development Tools and running `appinfo()` in the console.

@@ -1,7 +1,25 @@
# Data Controller for SAS: Data Catalog
Data Controller collects information about the size and shape of the tables and columns. The Catalog does not contain information about the data content (values).

---
layout: article
title: DC Data Catalog
description: Catalog the Libraries, Tables, Columns, SAS Catalogs, and associated Objects in your SAS estate
og_title: DC Data Catalog Documentation
og_image: /img/catalog.png
---

The catalog is based primarily on the existing SAS dictionary tables, augmented with attributes such as primary key fields, filesize / libsize, and number of observations (eg for database tables).
# DC Data Catalog

In any SAS estate, it's unlikely that the size & shape of data will remain static. By running a regular Catalog Scan, you can track changes such as:

- Library Properties (size, schema, path, number of tables)
- Table Properties (size, number of columns, primary keys)
- Variable Properties (presence in a primary key, constraints, position in the dataset)
- SAS Catalog Properties (number of entries, created / modified datetimes)
- SAS Catalog Object Properties (entry name, type, description, created / modified datetimes)

The data is stored with SCD2, so you can actually **track changes to your model over time**! Curious when that new column appeared? Just check the history in [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs).

The Catalog does **not** contain information about the data content (values). It is based primarily on the existing SAS dictionary tables, augmented with attributes such as primary key fields, filesize / libsize, and number of observations (eg for database tables).

Frequently changing data (such as nobs and size) is stored in the MPE_DATASTATUS_XXX tables. The rest is stored in the MPE_DATACATALOG_XXX tables.

@@ -20,6 +38,10 @@ Table attributes are split between those that change infrequently (eg PK_FIELDS)

Variable attributes come from the dictionary tables, with an extra PK indicator. A variable is flagged as part of the PK when it belongs to an index that is both UNIQUE and NOTNULL. Variable names are always uppercase.
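
The PK rule can be expressed as a filter over index metadata. In this Python sketch, each input row mimics one column-within-an-index record, as found in the SAS dictionary tables; the dict keys (`index`, `column`, `unique`, `notnull`) and the function name are our own hypothetical choices, not the dictionary table's actual column names.

```python
def pk_columns(index_rows: list[dict]) -> list[str]:
    """Return uppercase column names that belong to a UNIQUE + NOTNULL index."""
    pk: list[str] = []
    for row in index_rows:
        if row["unique"] and row["notnull"]:
            name = row["column"].upper()  # variable names are reported uppercase
            if name not in pk:
                pk.append(name)
    return pk


if __name__ == "__main__":
    rows = [
        {"index": "PK_TRADES", "column": "trade_id", "unique": True, "notnull": True},
        {"index": "PK_TRADES", "column": "version", "unique": True, "notnull": True},
        {"index": "IX_DESK", "column": "desk", "unique": False, "notnull": False},
        {"index": "UX_REF", "column": "ext_ref", "unique": True, "notnull": False},
    ]
    print(pk_columns(rows))
```

Note that a unique index that allows missing values (`UX_REF` above) does not qualify.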

### Catalogs & Objects

This info comes from the `dictionary.catalogs` table. The catalog created / modified time is considered to be the earliest created time / latest modified time of the underlying objects.
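
In other words, the catalog-level timestamps are an aggregate over its entries. A small Python sketch of that rule; the `created` / `modified` keys and the function name are hypothetical stand-ins for the object attributes.

```python
from datetime import datetime


def catalog_times(objects: list[dict]) -> tuple[datetime, datetime]:
    """Earliest created and latest modified datetime across a catalog's entries."""
    if not objects:
        raise ValueError("catalog has no entries")
    created = min(obj["created"] for obj in objects)
    modified = max(obj["modified"] for obj in objects)
    return created, modified
```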

## Assumptions

The following assumptions are made:
@@ -31,3 +53,37 @@ The following assumptions are made:
If you have duplicate librefs, specific table security setups, or sensitive models, contact us.

## Refreshing the Data Catalog

The update process for INDIVIDUAL libraries can be run by any user, and is performed in the VIEW menu by expanding a library definition and clicking the refresh icon next to the library name.

Members of the admin group may run the refresh process for ALL libraries by clicking the REFRESH button on the System page.

When doing a full scan, the following LIBREFs are ignored:

* 'CASUSER'
* 'MAPSGFK'
* 'SASUSER'
* 'SASWORK'
* 'STPSAMP'
* 'TEMP'
* 'WORK'

Additional LIBREFs can be excluded by adding them to the `DCXXXX.MPE_CONFIG` table (where `var_scope='DC_CATALOG' and var_name='DC_IGNORELIBS'`). Use a pipe (`|`) symbol to separate them. This can be useful where there are connection issues for a particular library.

Be aware that the scan process can take a long time if you have a lot of tables!

Output tables (all SCD2):

* [MPE_DATACATALOG_CATS](/tables/mpe_datacatalog_cats) - SAS Catalog list
* [MPE_DATACATALOG_LIBS](/tables/mpe_datacatalog_libs) - Library attributes
* [MPE_DATACATALOG_OBJS](/tables/mpe_datacatalog_objs) - SAS Catalog object attributes
* [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs) - Table attributes
* [MPE_DATACATALOG_VARS](/tables/mpe_datacatalog_vars) - Column attributes
* [MPE_DATASTATUS_CATS](/tables/mpe_datastatus_cats) - Frequently changing catalog attributes (such as created / modified datetimes and number of entries)
* [MPE_DATASTATUS_LIBS](/tables/mpe_datastatus_libs) - Frequently changing library attributes (such as size & number of tables)
* [MPE_DATASTATUS_OBJS](/tables/mpe_datastatus_objs) - Frequently changing catalog object attributes (such as created / modified datetimes and library concatenation level)
* [MPE_DATASTATUS_TABS](/tables/mpe_datastatus_tabs) - Frequently changing table attributes (such as size & number of rows)

@@ -1,68 +0,0 @@
# Data Controller for SAS: File Uploads

Files can be uploaded via the Editor interface - first choose the library and table, then click "Upload". All versions of Excel are supported.

Uploaded data may *optionally* contain a column named `_____DELETE__THIS__RECORD_____` - where this contains the value "Yes", the row is marked for deletion.

If loading very large files (eg over 10 MB), it is more efficient to use CSV format, as this bypasses the local rendering engine. However, it also bypasses the local DQ checks, so be careful! Examples of checks that are applied locally to Excel uploads, but not remotely to CSV uploads, include:

* Length of character variables - CSV values are truncated at the max target column length
* Length of numeric variables - if the target numeric variable is below 8 bytes, then the staged CSV value may be rounded if it is too large to fit
* NOTNULL - this rule is only applied at the backend when the constraint is physical (rather than a DC setting)
* MINVAL
* MAXVAL
* CASE

Note that the `HARDSELECT_***` hooks are not applied to the rendered Excel values (they are currently only applied when editing a cell).

## Excel Uploads

Thanks to our pro licence of [SheetJS](https://sheetjs.com/), we can support all versions of Excel, large workbooks, and extract data extremely fast. We also support the ingest of [password-protected workbooks](/videos#uploading-a-password-protected-excel-file).

The rules for data extraction are:

* Scan the spreadsheet until a row is found with all the target columns (not case sensitive)
* Extract data below until the *first row containing a blank primary key value*

This is incredibly flexible, and means:

* data can be anywhere, on any worksheet
* data can contain additional columns (they are just ignored)
* data can be completely surrounded by other data
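
The two extraction rules can be sketched in Python over a workbook represented as a list of sheets, each a list of rows. This is an approximation for illustration only (the real extraction runs in the browser via SheetJS). `extract_first_range` is a hypothetical name, and treating `None` or an empty string as a blank primary key is our assumption. Sheets are scanned in order and the first matching header row wins, consistent with the note that only the first range is extracted.

```python
from typing import Any


def _cell(row: list[Any], i: int) -> Any:
    """Value at column i, or None if the row is shorter than that."""
    return row[i] if i < len(row) else None


def _is_blank(value: Any) -> bool:
    return value is None or (isinstance(value, str) and value.strip() == "")


def extract_first_range(
    sheets: list[list[list[Any]]], target_cols: list[str], pk_cols: list[str]
) -> list[dict[str, Any]]:
    """Extract the first range whose header row holds every target column.

    pk_cols must be a subset of target_cols.
    """
    targets = [col.upper() for col in target_cols]
    for sheet in sheets:  # worksheets are scanned in order
        for r, row in enumerate(sheet):
            headers = ["" if c is None else str(c).strip().upper() for c in row]
            # Rule 1: the header row must contain all target columns (case-insensitive).
            if not all(t in headers for t in targets):
                continue
            pos = {t: headers.index(t) for t in targets}
            records = []
            for data_row in sheet[r + 1:]:
                # Rule 2: stop at the first row with a blank primary key value.
                if any(_is_blank(_cell(data_row, pos[pk.upper()])) for pk in pk_cols):
                    break
                # Columns that are not targets are simply ignored.
                records.append({t: _cell(data_row, pos[t]) for t in targets})
            return records  # only the FIRST matching range is used
    return []
```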

A copy of the original Excel file is also uploaded to the staging area. This means that a complete audit trail can be captured, right back to the original source data.

!!! note
    If the Excel file contains more than one range with the target columns (eg on different sheets), only the FIRST will be extracted.

## CSV Uploads

The following should be considered when uploading data in this way:

- A header row (with variable names) is required
- Variable names must match those in the target table (not case sensitive). An easy way to ensure this is to download the data from the Viewer and use it as a template.
- Duplicate variable names are not permitted
- Missing columns are not permitted
- Additional columns are ignored
- The order of variables does not matter EXCEPT for the (optional) `_____DELETE__THIS__RECORD_____` variable. When using this variable, it must be the **first**.
- The delimiter is extracted from the header row - so for `var1;var2;var3` the delimiter would be assumed to be a semicolon
- The above assumes the delimiter is the first special character! So `var,1;var2;var3` would fail
- The following characters should **not** be used as delimiters:
    - doublequote
    - quote
    - space
    - underscore

|

||||

|

||||
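
The delimiter rule can be sketched as follows. This is a hypothetical Python helper, not Data Controller's code; in particular, treating any character other than a letter, digit or underscore as "special" is an assumption based on SAS variable naming rules.

```python
import re

# Assumption: a "special character" is anything other than a letter, digit or
# underscore, since only those characters appear in SAS variable names.
_SPECIAL = re.compile(r"[^A-Za-z0-9_]")


def detect_delimiter(header_line: str) -> str:
    """Return the first special character found in the CSV header row."""
    match = _SPECIAL.search(header_line)
    if match is None:
        raise ValueError("no delimiter found in header row")
    return match.group(0)


print(detect_delimiter("var1;var2;var3"))   # ;
print(detect_delimiter("var,1;var2;var3"))  # ,
```

The second call shows why `var,1;var2;var3` fails: the comma is found first, so the header would be split into `var` and `1;var2;var3`.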

When loading dates, be aware that Data Controller makes use of the `ANYDTDTE` and `ANYDTDTM` informats (width 19).

This means that uploaded date / datetime values should be unambiguous (eg `01FEB1942` rather than `01/02/42`) to avoid confusion, as the latter could be interpreted as `02JAN2042` depending on your locale and `YEARCUTOFF` option settings. Note that datetimes with UTC offset values (eg `2018-12-26T09:19:25.123+0100`) are not currently supported. If this is a feature you would like to see, contact us.
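
The sketch below illustrates why `01/02/42` is ambiguous. It is a simplified Python model, not SAS's `ANYDTDTE` logic: `order` stands in for the day/month ordering (in SAS this is governed by the `DATESTYLE=` system option, which by default follows the locale), and `yearcutoff` mimics `YEARCUTOFF=`, which maps two-digit years into a 100-year window starting at that year. The cutoffs 1926 and 1950 are arbitrary illustrations.

```python
from datetime import date


def expand_two_digit_year(yy: int, yearcutoff: int) -> int:
    """Map a two-digit year into the 100-year window [yearcutoff, yearcutoff + 99]."""
    century = yearcutoff - yearcutoff % 100
    year = century + yy
    if year < yearcutoff:
        year += 100
    return year


def read_slash_date(text: str, order: str, yearcutoff: int) -> date:
    """Parse 'aa/bb/yy' as month-first ('MDY') or day-first ('DMY')."""
    a, b, yy = (int(part) for part in text.split("/"))
    month, day = (a, b) if order == "MDY" else (b, a)
    return date(expand_two_digit_year(yy, yearcutoff), month, day)


for order in ("MDY", "DMY"):
    for cutoff in (1926, 1950):
        print(order, cutoff, read_slash_date("01/02/42", order, cutoff))
# MDY 1926 1942-01-02
# MDY 1950 2042-01-02
# DMY 1926 1942-02-01
# DMY 1950 2042-02-01
```

The same six characters yield four different dates, whereas `01FEB1942` has only one reading.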

!!! tip
    To get a copy of a file in the right format for upload, use the [file download](/dc-userguide/#usage) feature in the Viewer tab.

!!! warning
    Lengths are taken from the target table. If a CSV contains long strings (eg `"ABCDE"` for a $3 variable) then the rest will be silently truncated (only `"ABC"` staged and loaded). If the target variable is a short numeric (eg 4., or 4 bytes) then floats or large integers may be rounded. This issue does not apply to Excel uploads, which are first validated in the browser.
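
To see what silent truncation means in practice, here is a small Python model of the two cases in the warning above. It assumes a Windows/Unix SAS host, where a numeric variable shorter than 8 bytes keeps only the leading bytes of the IEEE 754 double (so precision is lost rather than the value being rejected); `stage_char` and `stage_numeric` are hypothetical names.

```python
import struct


def stage_char(value: str, length: int) -> str:
    """Keep only the first `length` characters, as for a $length variable."""
    return value[:length]


def stage_numeric(value: float, length: int) -> float:
    """Keep only the first `length` bytes of the big-endian IEEE 754 double.

    Models a SAS numeric variable shorter than 8 bytes on Windows/Unix:
    the low-order mantissa bytes are dropped, so precision is lost.
    """
    if not 3 <= length <= 8:
        raise ValueError("SAS numeric lengths on Windows/Unix range from 3 to 8")
    raw = struct.pack(">d", value)[:length] + b"\x00" * (8 - length)
    return struct.unpack(">d", raw)[0]


print(stage_char("ABCDE", 3))     # ABC
print(stage_numeric(2097152, 4))  # 2097152.0
print(stage_numeric(2097153, 4))  # 2097152.0
```

At 4 bytes, integers are only stored exactly up to 2,097,152, so `2097153` comes back one lower without any error being raised.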

@@ -9,7 +9,7 @@ og_image: https://docs.datacontroller.io/img/dci_deploymentdiagramviya.png

## Overview

Data Controller for SAS Viya consists of a frontend, a set of Job Execution Services, a staging area, a Compute Context, and a database library. The library can use the SAS Base engine if desired; however, this can cause contention (eg table locks) if end users are able to connect to the datasets directly, eg via Enterprise Guide or Base SAS.

A database that supports concurrent access is highly recommended.

A database that supports concurrent access is recommended.

## Prerequisites

@@ -37,29 +37,28 @@ The below areas of the SAS Viya platform are modified when deploying Data Contro

<img src="/img/dci_deploymentdiagramviya.svg" height="350" style="border:3px solid black" >

!!! note
    The "streaming" version of Viya uses the files API for web content, so there is no need for the web server component.

## Deployment

Data Controller deployment is split between 3 deployment types:

Data Controller deployment is split between 2 deployment types:

* Demo version
* Full Version (manual deploy)
* Full Version (automated deploy)
* Streaming (web content served from SAS Drive)
* Full (web content served from a dedicated web server)

<!--
## Full Version - Manual Deploy
-->

There are several parts to this process:

1. Create the Compute Context
2. Deploy Frontend
4. Prepare the database and update settings (optional)
5. Update the Compute Context autoexec
2. Deploy Frontend (Full deploy only)
3. Deploy Services
4. First Run Configuration
5. Prepare the database and update settings (optional)
6. Update the Compute Context autoexec

### Create Compute Context

The Viya Compute Context is used to spawn the Job Execution Services, such that those services may run under the specified system account, with a particular autoexec.

### 1. Create Shared Compute Context

We strongly recommend a dedicated compute context for running Data Controller. The setup requires an Administrator account.

@@ -74,21 +73,60 @@ We strongly recommend a dedicated compute context for running Data Controller.

!!! note
    XCMD is NOT required to use Data Controller.

### Deploy frontend

### 2. Deploy Frontend (Full Deploy only)

Unzip the frontend into your chosen directory (eg `/var/www/html/DataController`) on the SAS Web Server. Open `index.html` and update the following inside `dcAdapterSettings`:

- `appLoc` - this should point to the root folder on SAS Drive where you would like the Job Execution services to be created. This folder should NOT exist initially (if it is found, the backend will not be deployed).
- `contextName` - the name of the compute context you created in the previous step.
- `dcPath` - the physical location on the filesystem to be used for staged data. This is only used at deployment time; it can be configured later in `$(appLoc)/services/settings.sas`, or in the autoexec if used.
- `adminGroup` - the name of an existing group, which should have unrestricted access to Data Controller. This is only used at deployment time; it can be configured later in `$(appLoc)/services/settings.sas`, or in the autoexec if used.
- `servertype` - should be `SASVIYA`
- `debug` - can stay as `false` for performance, but could be switched to `true` for debugging startup issues
- `useComputeApi` - use `true` for best performance

Now, open https://YOURSERVER/DataController (using whichever subfolder you deployed to above) using an account that has the SAS privileges to write to the `appLoc` location.

### 3. Deploy Services

Services are deployed by running a SAS program.

#### Deploy Services: Streaming Deploy

Run the following in SAS Studio:

```sas
%let apploc=/Public/DataController; /* desired SAS Drive location */
filename dc url "https://git.datacontroller.io/dc/dc/releases/download/latest/viya.sas";
%inc dc;
```

#### Deploy Services: Full Deploy

Run the following in SAS Studio:

```sas
%let apploc=/Public/DataController; /* Per configuration in Step 2 above */
filename dc url "https://git.datacontroller.io/dc/dc/releases/download/latest/viya_noweb.sas";
%inc dc;
```

### 4. First Run Configuration

Now that the services are deployed (including the service which creates the staging area), we can open the Data Controller web interface and make the necessary configurations:

#### First Run Configuration: Streaming Deploy

At the end of the SAS log from Step 3, there will be a link (`YOURSAS.SERVER/SASJobExecution?_file=/YOUR/APPLOC/services/DC.html`). Open this to perform the configuration, such as:

* dcpath - physical path for deployment
* Admin Group - will have full access to DC
* Compute Context - should be a shared compute context for a multi-user deployment

#### First Run Configuration: Full Deploy

Open https://YOURSERVER/DataController (using whichever subfolder you deployed to above) using an account that has the SAS privileges to write to the `appLoc` location.

You will be presented with a deployment screen like the one below. Be sure to check the "Recreate Database" option and then click the "Deploy" button.

@@ -96,9 +134,8 @@ You will be presented with a deployment screen like the one below. Be sure to c

Your services are deployed! The app is operational, albeit still a little sluggish, as every single request uses the APIs to fetch the content of the `$(appLoc)/services/settings.sas` file.

To improve responsiveness by another 700ms we recommend you follow the steps in [Update Compute Context Autoexec](/dci-deploysasviya/#update-compute-context-autoexec) below.

### Deploy Database

### 5. Deploy Database

If you have a lot of users, such that concurrency (locked datasets) becomes an issue, you might consider migrating the control library to a database.

The first part of this is generating the DDL (and inserts). For this, use the DDL exporter as described [here](/admin-services/#export-database). If you need a flavour of DDL that is not yet supported, [contact us](https://datacontroller.io/contact/).

@@ -107,7 +144,9 @@ Step 2 is simply to run this DDL in your preferred database.

Step 3 is to update the library definition in the `$(appLoc)/services/settings.sas` file using SAS Studio.

### Update Compute Context Autoexec

### 6. Update Compute Context Autoexec

To improve responsiveness by another 700ms we recommend you follow these steps:

First, open the `$(appLoc)/services/settings.sas` file in SAS Studio, and copy the code.

@@ -132,54 +171,3 @@ To explain each of these lines:

* The final libname statement can also be configured to point at a database instead of a BASE engine directory (contact us for DDL)

If you have additional libraries that you would like to use in Data Controller, they should also be defined here.

<!--
## Full Version - Automated Deploy

The automated deploy makes use of the SASjs CLI to create the dependent context and job execution services. In addition to the standard prerequisites (a registered Viya system account and a prepared database) you will also need:

* a local copy of the [SASjs CLI](https://sasjs.io/sasjs-cli/#installation)
* a Client / Secret - with an administrator group in SCOPE, and an authorization_code GRANT_TYPE. The SASjs [Viya Token Generator](https://github.com/sasjs/viyatoken) may help with this.

### Prepare the Target and Token

To configure this part (a one-time, manual step), we need to run a single command:

```
sasjs add
```

A sequence of command line prompts will follow for defining the target. These prompts are described [here](https://sasjs.io/sasjs-cli-add/). Note that `appLoc` is the SAS Drive location in which the Data Controller jobs will be deployed.

### Prepare the Context JSON

This file describes the context that the CI/CD process will generate. Save this file, eg as `myContext.json`.

```
{
  "name": "DataControllerContext",
  "attributes": {
    "reuseServerProcesses": true,
    "runServerAs": "mycasaccount"
  },
  "environment": {
    "autoExecLines": [
      "%let DC_LIBREF=DCDBVIYA;",
      "%let DC_ADMIN_GROUP={{YOUR DC ADMIN GROUP}};",
      "%let DC_STAGING_AREA={{YOUR DEDICATED FILE SYSTEM DRIVE}};",
      "libname &dc_libref {{YOUR DC DATABASE}};"
    ],
    "options": []
  },
  "launchContext": {
    "contextName": "SAS Job Execution launcher context"
  },
  "launchType": "service"
}
```

### Prepare Deployment Script

The deployment script will run on a build server (or local desktop) and execute as follows:

```
# Create the compute context on the SAS Viya target
sasjs context create --source myContext.json --target myTarget
```

-->

106

docs/excel.md

@@ -1,16 +1,64 @@

---
layout: article
title: Excel
description: Load Excel to SAS whilst retaining your favourite formulas! Data can be on any sheet, on any cell, even surrounded by other data. All versions of Excel supported.
description: Data Controller can extract all manner of data from within an Excel file (including formulae) ready for ingestion into SAS. All versions of Excel are supported.
og_image: https://docs.datacontroller.io/img/excel_results.png
---

# Excel Uploads

Data Controller for SAS® supports all versions of Excel. Data is extracted from Excel within the browser - there is no need for additional SAS components. So long as the column names match those in the target table, the data can be on any worksheet, and start from any row and any column.

The data can be completely surrounded by irrelevant data - the extraction will stop as soon as it hits one empty cell in a primary key column. The columns can be in any order, and are not case sensitive. More details [here](/dcu-fileupload/#excel-uploads).

Data Controller supports two approaches for importing Excel data into SAS:

## Formulas

- Simple - source range in tabular format, with column names/values that match the target table. No configuration necessary.
- Complex - data is scattered across multiple ranges in a dynamic (non-fixed) arrangement. Pre-configuration necessary.

Thanks to our pro license of [sheetJS](https://sheetjs.com/), we can support all versions of Excel, large workbooks, and fast extracts. We also support the ingest of [password-protected workbooks](/videos#uploading-a-password-protected-excel-file).

Note that data is extracted from Excel _within the browser_ - meaning there is no need for any special SAS modules / products.

A copy of the original Excel file is also uploaded to the staging area. This means that a complete audit trail can be captured, right back to the original source data.

## Simple Excel Uploads

To make a _simple_ extract, select LOAD / Tables / (library/table) and click "UPLOAD" (or drag the file onto the page). No configuration necessary.

The rules for data extraction are:

* Scan each sheet until a row is found with all target columns
* Extract rows until the first *blank primary key value*

This is incredibly flexible, and means:

* data can be anywhere, on any worksheet
* data can start on any row, and any column
* data can be completely surrounded by other data
* columns can be in any order
* additional columns are simply ignored

!!! note
    If the Excel file contains more than one range with the target columns (eg, on different sheets), only the FIRST will be extracted.

Uploaded data may *optionally* contain a column named `_____DELETE__THIS__RECORD_____` - if this contains the value "Yes", the row is marked for deletion.
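
A minimal Python sketch of how the optional delete flag partitions an upload into rows to add or update and rows to delete. The exact comparison (a trimmed, case-sensitive match on `Yes`) is an assumption, and `split_deletes` is a hypothetical helper rather than part of Data Controller.

```python
from typing import Dict, List, Tuple

DELETE_COL = "_____DELETE__THIS__RECORD_____"


def split_deletes(rows: List[Dict[str, object]]) -> Tuple[List[dict], List[dict]]:
    """Separate rows flagged for deletion from rows to be added / updated."""
    upserts, deletes = [], []
    for row in rows:
        flag = str(row.get(DELETE_COL) or "").strip()
        payload = {k: v for k, v in row.items() if k != DELETE_COL}  # drop the flag column
        (deletes if flag == "Yes" else upserts).append(payload)
    return upserts, deletes


rows = [
    {"ID": 1, "NAME": "a", DELETE_COL: "Yes"},
    {"ID": 2, "NAME": "b", DELETE_COL: "No"},
    {"ID": 3, "NAME": "c"},
]
upserts, deletes = split_deletes(rows)
print(deletes)  # [{'ID': 1, 'NAME': 'a'}]
```

Rows without the column, or with any other value, are treated as ordinary additions or updates.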

If you are loading very large files (eg over 10 MB), CSV format is more efficient, as it bypasses the local rendering engine. However, it also bypasses the local DQ checks, so be careful! Examples of checks that are applied locally to Excel uploads, but not to CSV uploads, include:

* Length of character variables - CSV values are truncated at the max target column length
* Length of numeric variables - if the target numeric variable is shorter than 8 bytes, the staged CSV value may be rounded if it is too large to fit
* NOTNULL - this rule is only applied at the backend when the constraint is physical (rather than a DC setting)
* MINVAL
* MAXVAL
* CASE

Note that the `HARDSELECT_***` hooks are not applied to the rendered Excel values (they are only applied when actively editing a cell).

### Formulas

It is possible to configure certain columns to be extracted as formulae, rather than raw values. The target column must be character, and it should be wide enough to support the longest formula in the source data. If the order of values is important, you should include a row number in your primary key.

@@ -26,5 +74,53 @@ The final table will look like this:

If you would like further integrations / support with Excel uploads, we are happy to discuss new features. Just [get in touch](https://datacontroller.io/contact).

## Complex Excel Uploads

Through the use of "Excel Maps" you can dynamically extract individual cells or entire ranges from anywhere within a workbook - either through absolute / relative positioning, or by reference to a "matched" (search) string.

Configuration is made in the following tables:

1. [MPE_XLMAP_RULES](/tables/mpe_xlmap_rules) - detailed extraction rules for a particular map
2. [MPE_XLMAP_INFO](/tables/mpe_xlmap_info) - optional map-level attributes

Each [rule](/tables/mpe_xlmap_rules) will extract either a single cell or a rectangular range from the source workbook. The target will be [MPE_XLMAP_DATA](/tables/mpe_xlmap_data), or whichever table is configured in [MPE_XLMAP_INFO](/tables/mpe_xlmap_info).

To illustrate with an example, consider the following Excel workbook. The yellow cells need to be imported.

The [MPE_XLMAP_RULES](/tables/mpe_xlmap_rules) configuration entries _might_ (as there are multiple ways) be as follows:

|XLMAP_ID|XLMAP_RANGE_ID|XLMAP_SHEET|XLMAP_START|XLMAP_FINISH|
|---|---|---|---|---|
|MAP01|MI_ITEM|Current Month|`MATCH B R[1]C[0]: ITEM`|`LASTDOWN`|
|MAP01|MI_AMT|Current Month|`MATCH C R[1]C[0]: AMOUNT`|`LASTDOWN`|
|MAP01|TMI|Current Month|`ABSOLUTE F6`||
|MAP01|CB|Current Month|`MATCH F R[2]C[0]: CASH BALANCE`||
|MAP01|RENT|/1|`MATCH E R[0]C[2]: Rent/mortgage`||
|MAP01|CELL|/1|`MATCH E R[0]C[2]: Cell phone`||
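
To make the rule syntax concrete, the following Python sketch resolves a `MATCH` or `ABSOLUTE` start rule and a `LASTDOWN` finish rule against a single worksheet. It is not Data Controller's implementation. Assumptions: the sheet has already been selected and read into a list of rows (so `XLMAP_SHEET` is ignored), matching is exact and case-sensitive, and `LASTDOWN` is read as "the last non-blank cell in the column, at or below the start cell". All function names are hypothetical.

```python
import re
from typing import List, Tuple

Grid = List[List[object]]  # rows of cell values, None = empty


def col_index(letters: str) -> int:
    """Convert a column reference such as 'B' or 'AA' to a 0-based index."""
    n = 0
    for ch in letters.upper():
        n = n * 26 + (ord(ch) - ord("A") + 1)
    return n - 1


def resolve_start(grid: Grid, rule: str) -> Tuple[int, int]:
    """Resolve an XLMAP_START rule to a 0-based (row, col) position."""
    m = re.fullmatch(r"ABSOLUTE\s+([A-Z]+)(\d+)", rule.strip(), re.I)
    if m:
        return int(m.group(2)) - 1, col_index(m.group(1))
    m = re.fullmatch(r"MATCH\s+([A-Z]+|\d+)\s+R\[(-?\d+)\]C\[(-?\d+)\]:\s*(.+)", rule.strip(), re.I)
    if not m:
        raise ValueError(f"unsupported rule: {rule}")
    where, dr, dc, text = m.group(1), int(m.group(2)), int(m.group(3)), m.group(4)
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            # A letter searches one column; a number searches one (1-based) row.
            in_scope = (c == col_index(where)) if where.isalpha() else (r == int(where) - 1)
            if in_scope and str(value) == text:
                return r + dr, c + dc  # apply the R[n]C[n] offset
    raise LookupError(f"'{text}' not found")


def last_down(grid: Grid, start: Tuple[int, int]) -> List[object]:
    """LASTDOWN: cells from the start down to the last non-blank cell in that column."""
    r0, c = start
    column = [row[c] if c < len(row) else None for row in grid[r0:]]
    last = max((i for i, v in enumerate(column) if v not in (None, "")), default=-1)
    return column[: last + 1]


sheet = [
    [None, None, None],
    [None, "ITEM", "AMOUNT"],
    [None, "Income Source 1", 2500],
    [None, "Income Source 2", 1000],
    [None, "Other", 250],
]
start = resolve_start(sheet, "MATCH B R[1]C[0]: ITEM")  # (2, 1), ie cell B3
items = last_down(sheet, start)
print(items)  # ['Income Source 1', 'Income Source 2', 'Other']
```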

To import the Excel file, the end user simply needs to navigate to the LOAD tab, choose "Files", select the appropriate map (eg MAP01), and upload. This will stage the new records in [MPE_XLMAP_DATA](/tables/mpe_xlmap_data), which will go through the usual approval process and quality checks. A copy of the source Excel file will be attached to each upload.

The corresponding [MPE_XLMAP_DATA](/tables/mpe_xlmap_data) table will appear as follows:

| LOAD_REF | XLMAP_ID | XLMAP_RANGE_ID | ROW_NO | COL_NO | VALUE_TXT |
|---------------|----------|----------------|--------|--------|-----------------|
| DC20231212T154611798_648613_3895 | MAP01 | MI_ITEM | 1 | 1 | Income Source 1 |
| DC20231212T154611798_648613_3895 | MAP01 | MI_ITEM | 2 | 1 | Income Source 2 |
| DC20231212T154611798_648613_3895 | MAP01 | MI_ITEM | 3 | 1 | Other |
| DC20231212T154611798_648613_3895 | MAP01 | MI_AMT | 1 | 1 | 2500 |
| DC20231212T154611798_648613_3895 | MAP01 | MI_AMT | 2 | 1 | 1000 |
| DC20231212T154611798_648613_3895 | MAP01 | MI_AMT | 3 | 1 | 250 |
| DC20231212T154611798_648613_3895 | MAP01 | TMI | 1 | 1 | 3750 |
| DC20231212T154611798_648613_3895 | MAP01 | CB | 1 | 1 | 864 |
| DC20231212T154611798_648613_3895 | MAP01 | RENT | 1 | 1 | 800 |
| DC20231212T154611798_648613_3895 | MAP01 | CELL | 1 | 1 | 45 |
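
Because every cell lands in MPE_XLMAP_DATA as text, one row per cell, downstream code usually needs to pivot it back into ranges. A minimal Python sketch, assuming the rows have already been read out of SAS as tuples (LOAD_REF dropped); `to_ranges` is a hypothetical helper.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# A subset of MPE_XLMAP_DATA from the example above: (XLMAP_ID, XLMAP_RANGE_ID, ROW_NO, COL_NO, VALUE_TXT)
data = [
    ("MAP01", "MI_ITEM", 1, 1, "Income Source 1"),
    ("MAP01", "MI_ITEM", 2, 1, "Income Source 2"),
    ("MAP01", "MI_ITEM", 3, 1, "Other"),
    ("MAP01", "MI_AMT", 1, 1, "2500"),
    ("MAP01", "MI_AMT", 2, 1, "1000"),
    ("MAP01", "MI_AMT", 3, 1, "250"),
    ("MAP01", "TMI", 1, 1, "3750"),
]


def to_ranges(rows: Sequence[Tuple[str, str, int, int, str]]) -> Dict[str, List[List[str]]]:
    """Rebuild each XLMAP_RANGE_ID as a 2D list indexed by ROW_NO / COL_NO (1-based)."""
    cells: Dict[str, Dict[Tuple[int, int], str]] = defaultdict(dict)
    for _map_id, range_id, row_no, col_no, value in rows:
        cells[range_id][(row_no, col_no)] = value
    out = {}
    for range_id, grid in cells.items():
        n_rows = max(r for r, _ in grid)
        n_cols = max(c for _, c in grid)
        out[range_id] = [[grid.get((r, c)) for c in range(1, n_cols + 1)] for r in range(1, n_rows + 1)]
    return out


ranges = to_ranges(data)
income = [(item[0], float(amt[0])) for item, amt in zip(ranges["MI_ITEM"], ranges["MI_AMT"])]
print(income)  # [('Income Source 1', 2500.0), ('Income Source 2', 1000.0), ('Other', 250.0)]
```

Matching ROW_NO across the MI_ITEM and MI_AMT ranges pairs each income item with its amount, and the sum (3750) can be cross-checked against the TMI cell.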

### Video

<iframe title="Complex Excel Uploads" width="560" height="315" src="https://vid.4gl.io/videos/embed/3338f448-e92d-4822-b3ec-7f6d7530dfc8?peertubeLink=0" frameborder="0" allowfullscreen="" sandbox="allow-same-origin allow-scripts allow-popups"></iframe>

42

docs/files.md

Normal file

@@ -0,0 +1,42 @@

# Data Controller for SAS: File Uploads

Data Controller supports the ingestion of two file formats - Excel (any version) and CSV.

If you would like us to support other file types, do [get in touch](https://datacontroller.io/contact)!

## Excel Uploads

Data can be uploaded in regular (tabular) or dynamic (complex) format. For details, see the [excel](/excel) page.

## CSV Uploads

The following should be considered when uploading data in this way:

- A header row (with variable names) is required
- Variable names must match those in the target table (not case sensitive). An easy way to ensure this is to download the data from Viewer and use this as a template.
- Duplicate variable names are not permitted
- Missing columns are not permitted
- Additional columns are ignored
- The order of variables does not matter EXCEPT for the (optional) `_____DELETE__THIS__RECORD_____` variable. When using this variable, it must be the **first**.
- The delimiter is extracted from the header row - so for `var1;var2;var3` the delimiter would be assumed to be a semicolon
- The above assumes the delimiter is the first special character! So `var,1;var2;var3` would fail
- The following characters should **not** be used as delimiters:
    - doublequote
    - quote
    - space
    - underscore
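
The header rules in the list above can be checked before uploading. The following hypothetical Python helper is a sketch of those rules, not Data Controller's own validation; names are compared case-insensitively after trimming whitespace.

```python
from typing import List, Sequence

DELETE_COL = "_____DELETE__THIS__RECORD_____"


def validate_header(header: Sequence[str], target_cols: Sequence[str]) -> List[str]:
    """Return a list of problems with a CSV header row (an empty list means OK)."""
    names = [h.strip().upper() for h in header]
    target = {c.upper() for c in target_cols}
    problems = []
    dupes = sorted({n for n in names if names.count(n) > 1})
    if dupes:
        problems.append(f"duplicate columns: {', '.join(dupes)}")
    missing = sorted(target - set(names))
    if missing:
        problems.append(f"missing columns: {', '.join(missing)}")
    if DELETE_COL in names and names[0] != DELETE_COL:
        problems.append(f"{DELETE_COL} must be the first column")
    return problems  # additional (non-target) columns are simply ignored


print(validate_header(["id", "Name", "extra"], ["ID", "NAME"]))  # []
print(validate_header(["id"], ["ID", "NAME"]))  # ['missing columns: NAME']
```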

When loading dates, be aware that Data Controller makes use of the `ANYDTDTE` and `ANYDTDTM` informats (width 19).

This means that uploaded date / datetime values should be unambiguous (eg `01FEB1942` rather than `01/02/42`) to avoid confusion, as the latter could be interpreted as `02JAN2042` depending on your locale and `YEARCUTOFF` option settings. Note that datetimes with UTC offset values (eg `2018-12-26T09:19:25.123+0100`) are not currently supported. If this is a feature you would like to see, contact us.
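
Until offset datetimes are supported, one workaround is to normalise them before upload. The hypothetical Python helper below converts an ISO 8601 value with a UTC offset into a DATETIME-style string in UTC; we assume (but you should verify on your own system) that `ANYDTDTM` reads this form. Note that converting to UTC changes the wall-clock time, which may or may not be what you want.

```python
from datetime import datetime, timezone

MONTHS = ["JAN", "FEB", "MAR", "APR", "MAY", "JUN",
          "JUL", "AUG", "SEP", "OCT", "NOV", "DEC"]


def to_sas_datetime_text(iso_value: str) -> str:
    """Convert eg '2018-12-26T09:19:25.123+0100' to '26DEC2018:08:19:25.123' (UTC)."""
    parsed = datetime.strptime(iso_value, "%Y-%m-%dT%H:%M:%S.%f%z")
    utc = parsed.astimezone(timezone.utc)  # apply the offset
    millis = utc.microsecond // 1000
    # Build the month name from a fixed list so the result does not depend on locale.
    return f"{utc.day:02d}{MONTHS[utc.month - 1]}{utc.year}:{utc:%H:%M:%S}.{millis:03d}"


print(to_sas_datetime_text("2018-12-26T09:19:25.123+0100"))  # 26DEC2018:08:19:25.123
```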

!!! tip
    To get a copy of a file in the right format for upload, use the [file download](/dc-userguide/#usage) feature in the Viewer tab.

!!! warning
    Lengths are taken from the target table. If a CSV contains long strings (eg `"ABCDE"` for a $3 variable) then the rest will be silently truncated (only `"ABC"` staged and loaded). If the target variable is a short numeric (eg 4., or 4 bytes) then floats or large integers may be rounded. This issue does not apply to Excel uploads, which are first validated in the browser.

When loading CSVs, the entire file is passed to the backend for ingestion. This makes it more efficient for large files, but does mean that frontend validations are bypassed.

BIN docs/img/admininfo.png (new file, 150 KiB)

BIN docs/img/catalog.png (new file, 138 KiB)

BIN docs/img/catalogrefresh.png (new file, 56 KiB)

BIN docs/img/datacatalog_cats.png (new file, 126 KiB)

BIN docs/img/datacatalog_objs.png (new file, 178 KiB)

BIN docs/img/datastatus_cats.png (new file, 181 KiB)

BIN docs/img/datastatus_objs.png (new file, 195 KiB)

BIN (modified image: 169 KiB before, 56 KiB after)

BIN (modified image: 331 KiB before, 93 KiB after)

BIN docs/img/indexhtml_settings.png (new file, 37 KiB)

BIN docs/img/mpe_requests.png (new file, 109 KiB)

BIN docs/img/mpe_xlmap_data.png (new file, 150 KiB)

BIN docs/img/mpe_xlmap_info.png (new file, 51 KiB)

BIN docs/img/mpe_xlmap_rules.png (new file, 184 KiB)

BIN docs/img/restore.png (new file, 51 KiB)

BIN docs/img/revert.png (new file, 55 KiB)

BIN docs/img/xlmap_example.png (new file, 254 KiB)

BIN docs/img/xltables.png (new file, 101 KiB)

@@ -29,7 +29,7 @@ The following resources contain additional information on the Data Controller:

Data Controller is regularly updated with new features. If you see something that is not listed, and we agree it would be useful, you can engage us with Developer Days to build the feature in.

* [Excel uploads](/dcu-fileupload/#excel-uploads) - drag & drop directly into SAS. All versions of Excel supported.
* [Excel uploads](/excel) - drag & drop directly into SAS. All versions of Excel supported.
* Data Lineage - at both table and column level, export as image or CSV
* Data Validation Rules - both automatic and user defined
* Data Dictionary - map data definitions and ownership

32

docs/restore.md

Normal file

@@ -0,0 +1,32 @@

---
layout: article
title: Data Restore
description: How to restore a previous version of a Data Controller table
og_image: https://docs.datacontroller.io/img/restore.png
---

# Data Restore

For those tables which have [Audit Tracking](/dcc-tables/#audit_libds) enabled, it is possible to restore the data to an earlier state!

Simply open the submission to be reverted (via HISTORY or the table INFO/VERSIONS screen), and click the red **REVERT** button. This will generate a NEW submission, containing the necessary reversal entries. This new submission **must then be approved** in the usual fashion.

This approach means that the audit history remains intact - there is simply a new entry, which reverts all the previous entries.

## Caveats

Note that there are some caveats to this feature:

- User must have EDIT permission
- Table must have TXTEMPORAL or UPDATE Load Type
- Changes **outside** of Data Controller cannot be reversed
- If there are COLUMN or ROW level security rules, the restore will abort
- If the model has changed (new / deleted columns), the restore will abort

## Technical Information

The restore works by undoing all the changes listed in the [MPE_AUDIT](/tables/mpe_audit/) table. The keys from this table (for all changes since, and including, the version to be restored) are left joined to the base table (to get current values) to create a staging dataset, and then the changes are applied in reverse chronological order using [this macro](https://core.sasjs.io/mp__stripdiffs_8sas.html). This staging dataset is then submitted for approval, providing a final sense check before the new / reverted state is applied.
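
The reversal logic can be illustrated with a heavily simplified Python model. The `Change` record is a hypothetical stand-in for MPE_AUDIT (the real table has a different, more detailed structure, and the real work is done by the linked `mp_stripdiffs` macro); only the idea of undoing changes newest-first is shown.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Row = Dict[str, object]


@dataclass
class Change:
    """One simplified audit entry (hypothetical, not the MPE_AUDIT layout)."""
    version: int          # submission sequence number
    key: str              # business key of the affected record
    move: str             # "ADD", "DELETE" or "UPDATE"
    old: Optional[Row]    # record before the change (None for ADD)
    new: Optional[Row]    # record after the change (None for DELETE)


def revert(current: Dict[str, Row], changes: List[Change], since: int) -> Dict[str, Row]:
    """Undo every change with version >= since, newest first; return the target state."""
    state = {k: dict(v) for k, v in current.items()}  # never modify the live table
    for ch in sorted(changes, key=lambda c: c.version, reverse=True):
        if ch.version < since:
            continue
        if ch.move == "ADD":
            state.pop(ch.key, None)        # undo an insert: remove the record
        else:
            state[ch.key] = dict(ch.old)   # undo an update or delete: restore old values
    return state


changes = [
    Change(1, "A", "ADD", None, {"ID": "A", "QTY": 5}),
    Change(1, "B", "ADD", None, {"ID": "B", "QTY": 2}),
    Change(2, "A", "UPDATE", {"ID": "A", "QTY": 5}, {"ID": "A", "QTY": 7}),
    Change(2, "B", "DELETE", {"ID": "B", "QTY": 2}, None),
    Change(2, "C", "ADD", None, {"ID": "C", "QTY": 1}),
]
current = {"A": {"ID": "A", "QTY": 7}, "C": {"ID": "C", "QTY": 1}}
restored = revert(current, changes, since=2)
print(restored)  # {'A': {'ID': 'A', 'QTY': 5}, 'B': {'ID': 'B', 'QTY': 2}}
```

As in the approach described above, the result is a proposed state to be submitted for approval, rather than an in-place edit of the table.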

Source code for the restore process is available [here](https://git.datacontroller.io/dc/dc/src/branch/main/sas/sasjs/services/editors/restore.sas).

@@ -1,7 +1,7 @@

---
layout: article
title: Roadmap
description: The Data Controller roadmap is aligned with the needs of our customers - we continue to build and prioritise on Features requested by, and funded by, new and existing customers.
description: The Data Controller roadmap is aligned with the needs of our customers - we continue to build and prioritise on Features requested by new and existing customers.
og_image: https://i.imgur.com/xFGhgg0.png
---

@@ -11,20 +11,16 @@ og_image: https://i.imgur.com/xFGhgg0.png

On this page you can find details of the Features that have currently been requested, that we agree would add value to the product, and that are therefore in our development roadmap.

Where customers are paying for the new Features (eg with our discounted Developer Days offer), those Features will always take priority. Where funding is not available, new Features will be addressed during the Bench Time of our developers, and will always be performed after Bug Fixes.

If you would like to see a new Feature added to Data Controller, then let's have a chat!

## Requested Features

Where features are requested, whether there is budget or not, we will describe the work below and provide estimates.

When features are requested, we will describe the work to be performed in the sections below.

The following features are currently requested, and awaiting budget:

The following features are currently requested:

* Ability to set 'number of approvals' to zero, enabling instant updates (4 days)
* Ability to restore previous versions
* Ability to import complex Excel data using Excel Maps (10.5 days)
* Ability to make automated submissions using an API

@@ -40,67 +36,6 @@ The following changes are necessary to implement this feature:

* Updates to documentation
* Release Notes

### Complex Excel Uploads

When Excel data arrives in multiple ranges, or individual cells, and the cells vary in terms of their column or row identifier (made more "interesting" by the use of merged cells), a rules engine becomes necessary!

This feature enables the use of "EXCEL MAPS". It will enable multiple tables to be loaded in a single import, and that data can be scattered across multiple sheets / cells / ranges, accessed via the rules described further below.

The backend SAS tables must still exist, but the column names do not need to appear in the Excel file.

To drive the behaviour, a new configuration table must be added to the control library - MPE_EXCEL_MAP. The columns are defined as follows:

* **XLMAP_ID** - a unique reference for the Excel map
* **XLMAP_LIB** - the library of the target table for the data item or range
* **XLMAP_DS** - the target table for the data item or range
* **XLMAP_COL** - the target column for the data item or range
* **XLMAP_SHEET** - the sheet name in which to capture the data. Rules start with a forward slash (/). Example values:
    * `Sheet2` - an absolute reference
    * `/FIRST` - the first tab in the workbook
* **XLMAP_START** - the rule used to find the top left of the range. Use "R1C1" notation to move the target. Example values:
    * `ABSOLUTE F4` - an absolute reference
    * `MATCH P R[0]C[2] |My Test` - search column P for the string "My Test" then move 2 columns right
    * `MATCH 7 R[-2]C[-1] |Top Banana` - search row 7 for the string "Top Banana" then move 2 rows up and 1 column left
* **XLMAP_FINISH** - the rule used to find the end of the range. Leave blank for individual cells. Example values:
    * `BLANKROW` - search down until a blank row is found, then choose the row above it
    * `LASTDOWN` - the last non-blank cell below the start cell
    * `RIGHT 3` - select 3 more cells to the right (4 selected in total)
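
A Python sketch of how the proposed `XLMAP_FINISH` rules could turn a start cell into the bottom-right corner of a range. This only interprets the descriptions above (`LASTDOWN` is left out); in particular, `BLANKROW` is assumed to keep the start column, since the proposal does not say how the right-hand edge is chosen. `resolve_finish` is a hypothetical name.

```python
from typing import List, Tuple

Grid = List[List[object]]  # rows of cell values, None = empty


def _blank(value: object) -> bool:
    return value is None or value == ""


def resolve_finish(grid: Grid, start: Tuple[int, int], rule: str) -> Tuple[int, int]:
    """Return the bottom-right (row, col) of a range, given its 0-based top-left start."""
    r0, c0 = start
    parts = rule.split()
    kind = parts[0].upper() if parts else ""
    if kind == "":          # blank rule: a single cell
        return r0, c0
    if kind == "RIGHT":     # RIGHT n: n more cells to the right (n + 1 in total)
        return r0, c0 + int(parts[1])
    if kind == "BLANKROW":  # move down until the next row is entirely blank
        r = r0
        while r + 1 < len(grid) and not all(_blank(v) for v in grid[r + 1]):
            r += 1
        return r, c0
    raise ValueError(f"unsupported finish rule: {rule}")


grid = [
    ["a", "b", None, None],
    ["c", None, None, None],
    [None, "d", None, None],
    [None, None, None, None],
    ["e", None, None, None],
]
print(resolve_finish(grid, (0, 0), "BLANKROW"))  # (2, 0)
print(resolve_finish(grid, (0, 0), "RIGHT 3"))   # (0, 3)
```

Row 2 is kept by `BLANKROW` even though its first cell is empty, because the rule looks for a fully blank row rather than a blank cell.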

To illustrate with an example, consider the following Excel workbook. The yellow cells need to be imported.