Compare commits

No commits in common. "main" and "gh-pages" have entirely different histories.

@ -1,53 +0,0 @@
name: Publish to docs.datacontroller.io
on:
  push:
    branches:
      - main

jobs:
  build:
    name: Deploy docs
    runs-on: ubuntu-latest
    steps:
      - uses: actions/setup-node@v3
        with:
          node-version: 18

      - name: Checkout master
        uses: actions/checkout@v2

      - name: Setup Python
        uses: actions/setup-python@v4
        env:
          AGENT_TOOLSDIRECTORY: /opt/hostedtoolcache
          RUNNER_TOOL_CACHE: /opt/hostedtoolcache

      - name: Install pip3
        run: |
          apt-get update
          apt-get install python3-pip -y

      - name: Install Chrome
        run: |
          apt-get update
          wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb
          apt install -y ./google-chrome*.deb;
          export CHROME_BIN=/usr/bin/google-chrome

      - name: Install Surfer
        run: |
          npm -g install cloudron-surfer

      - name: build site
        run: |
          pip3 install mkdocs
          pip3 install mkdocs-material
          pip3 install fontawesome_markdown
          pip3 install mkdocs-redirects
          python3 -m mkdocs build --clean
          mkdir site/slides
          npx @marp-team/marp-cli slides/innovation/innovation.md -o ./site/slides/innovation/index.html
          npx @marp-team/marp-cli slides/if/if.md -o site/if.pdf --allow-local-files --html=true

      - name: Deploy docs
        run: surfer put --token ${{ secrets.SURFERKEY }} --server docs.datacontroller.io site/* /

4

.gitignore

vendored

@ -1,4 +0,0 @@
site/
*.swp
node_modules/
**/.DS_Store

@ -1,8 +0,0 @@
# This configuration file was automatically generated by Gitpod.
# Please adjust to your needs (see https://www.gitpod.io/docs/config-gitpod-file)
# and commit this file to your remote git repository to share the goodness with others.

tasks:
  - init: npm install

5

.vscode/extensions.json

vendored

@ -1,5 +0,0 @@
{
  "recommendations": [
    "marp-team.marp-vscode"
  ]
}

@ -1,3 +0,0 @@
Deploy steps in build.sh

Note that the readme has been renamed to README.txt to prevent github pages from considering it to be the index by default!

1275

admin-services/index.html

Normal file

1271

api/index.html

Normal file

BIN

assets/images/favicon.png

Normal file

|

After Width: | Height: | Size: 1.8 KiB |

2

assets/javascripts/bundle.71201edf.min.js

vendored

Normal file

1

assets/javascripts/bundle.71201edf.min.js.map

Normal file

1

assets/javascripts/lunr/min/lunr.ar.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.da.min.js

vendored

Normal file

@ -0,0 +1,18 @@
/*!
 * Lunr languages, `Danish` language
 * https://github.com/MihaiValentin/lunr-languages
 *
 * Copyright 2014, Mihai Valentin
 * http://www.mozilla.org/MPL/
 */
/*!
 * based on
 * Snowball JavaScript Library v0.3
 * http://code.google.com/p/urim/
 * http://snowball.tartarus.org/
 *
 * Copyright 2010, Oleg Mazko
 * http://www.mozilla.org/MPL/
 */

!function(e,r){"function"==typeof define&&define.amd?define(r):"object"==typeof exports?module.exports=r():r()(e.lunr)}(this,function(){return function(e){if(void 0===e)throw new Error("Lunr is not present. Please include / require Lunr before this script.");if(void 0===e.stemmerSupport)throw new Error("Lunr stemmer support is not present. Please include / require Lunr stemmer support before this script.");e.da=function(){this.pipeline.reset(),this.pipeline.add(e.da.trimmer,e.da.stopWordFilter,e.da.stemmer),this.searchPipeline&&(this.searchPipeline.reset(),this.searchPipeline.add(e.da.stemmer))},e.da.wordCharacters="A-Za-zªºÀ-ÖØ-öø-ʸˠ-ˤᴀ-ᴥᴬ-ᵜᵢ-ᵥᵫ-ᵷᵹ-ᶾḀ-ỿⁱⁿₐ-ₜKÅℲⅎⅠ-ↈⱠ-ⱿꜢ-ꞇꞋ-ꞭꞰ-ꞷꟷ-ꟿꬰ-ꭚꭜ-ꭤff-stA-Za-z",e.da.trimmer=e.trimmerSupport.generateTrimmer(e.da.wordCharacters),e.Pipeline.registerFunction(e.da.trimmer,"trimmer-da"),e.da.stemmer=function(){var r=e.stemmerSupport.Among,i=e.stemmerSupport.SnowballProgram,n=new function(){function e(){var e,r=f.cursor+3;if(d=f.limit,0<=r&&r<=f.limit){for(a=r;;){if(e=f.cursor,f.in_grouping(w,97,248)){f.cursor=e;break}if(f.cursor=e,e>=f.limit)return;f.cursor++}for(;!f.out_grouping(w,97,248);){if(f.cursor>=f.limit)return;f.cursor++}d=f.cursor,d<a&&(d=a)}}function n(){var e,r;if(f.cursor>=d&&(r=f.limit_backward,f.limit_backward=d,f.ket=f.cursor,e=f.find_among_b(c,32),f.limit_backward=r,e))switch(f.bra=f.cursor,e){case 1:f.slice_del();break;case 2:f.in_grouping_b(p,97,229)&&f.slice_del()}}function t(){var e,r=f.limit-f.cursor;f.cursor>=d&&(e=f.limit_backward,f.limit_backward=d,f.ket=f.cursor,f.find_among_b(l,4)?(f.bra=f.cursor,f.limit_backward=e,f.cursor=f.limit-r,f.cursor>f.limit_backward&&(f.cursor--,f.bra=f.cursor,f.slice_del())):f.limit_backward=e)}function s(){var e,r,i,n=f.limit-f.cursor;if(f.ket=f.cursor,f.eq_s_b(2,"st")&&(f.bra=f.cursor,f.eq_s_b(2,"ig")&&f.slice_del()),f.cursor=f.limit-n,f.cursor>=d&&(r=f.limit_backward,f.limit_backward=d,f.ket=f.cursor,e=f.find_among_b(m,5),f.limit_backward=r,e))switch(f.bra=f.cursor,e){case 
1:f.slice_del(),i=f.limit-f.cursor,t(),f.cursor=f.limit-i;break;case 2:f.slice_from("løs")}}function o(){var e;f.cursor>=d&&(e=f.limit_backward,f.limit_backward=d,f.ket=f.cursor,f.out_grouping_b(w,97,248)?(f.bra=f.cursor,u=f.slice_to(u),f.limit_backward=e,f.eq_v_b(u)&&f.slice_del()):f.limit_backward=e)}var a,d,u,c=[new r("hed",-1,1),new r("ethed",0,1),new r("ered",-1,1),new r("e",-1,1),new r("erede",3,1),new r("ende",3,1),new r("erende",5,1),new r("ene",3,1),new r("erne",3,1),new r("ere",3,1),new r("en",-1,1),new r("heden",10,1),new r("eren",10,1),new r("er",-1,1),new r("heder",13,1),new r("erer",13,1),new r("s",-1,2),new r("heds",16,1),new r("es",16,1),new r("endes",18,1),new r("erendes",19,1),new r("enes",18,1),new r("ernes",18,1),new r("eres",18,1),new r("ens",16,1),new r("hedens",24,1),new r("erens",24,1),new r("ers",16,1),new r("ets",16,1),new r("erets",28,1),new r("et",-1,1),new r("eret",30,1)],l=[new r("gd",-1,-1),new r("dt",-1,-1),new r("gt",-1,-1),new r("kt",-1,-1)],m=[new r("ig",-1,1),new r("lig",0,1),new r("elig",1,1),new r("els",-1,1),new r("løst",-1,2)],w=[17,65,16,1,0,0,0,0,0,0,0,0,0,0,0,0,48,0,128],p=[239,254,42,3,0,0,0,0,0,0,0,0,0,0,0,0,16],f=new i;this.setCurrent=function(e){f.setCurrent(e)},this.getCurrent=function(){return f.getCurrent()},this.stem=function(){var r=f.cursor;return e(),f.limit_backward=r,f.cursor=f.limit,n(),f.cursor=f.limit,t(),f.cursor=f.limit,s(),f.cursor=f.limit,o(),!0}};return function(e){return"function"==typeof e.update?e.update(function(e){return n.setCurrent(e),n.stem(),n.getCurrent()}):(n.setCurrent(e),n.stem(),n.getCurrent())}}(),e.Pipeline.registerFunction(e.da.stemmer,"stemmer-da"),e.da.stopWordFilter=e.generateStopWordFilter("ad af alle alt anden at blev blive bliver da de dem den denne der deres det dette dig din disse dog du efter eller en end er et for fra ham han hans har havde have hende hendes her hos hun hvad hvis hvor i ikke ind jeg jer jo kunne man mange med meget men mig min mine mit mod ned noget nogle nu 
når og også om op os over på selv sig sin sine sit skal skulle som sådan thi til ud under var vi vil ville vor være været".split(" ")),e.Pipeline.registerFunction(e.da.stopWordFilter,"stopWordFilter-da")}});


18

assets/javascripts/lunr/min/lunr.de.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.du.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.es.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.fi.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.fr.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.hu.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.it.min.js

vendored

Normal file

1

assets/javascripts/lunr/min/lunr.ja.min.js

vendored

Normal file

@ -0,0 +1 @@


!function(e,r){"function"==typeof define&&define.amd?define(r):"object"==typeof exports?module.exports=r():r()(e.lunr)}(this,function(){return function(e){if(void 0===e)throw new Error("Lunr is not present. Please include / require Lunr before this script.");if(void 0===e.stemmerSupport)throw new Error("Lunr stemmer support is not present. Please include / require Lunr stemmer support before this script.");var r="2"==e.version[0];e.ja=function(){this.pipeline.reset(),this.pipeline.add(e.ja.trimmer,e.ja.stopWordFilter,e.ja.stemmer),r?this.tokenizer=e.ja.tokenizer:(e.tokenizer&&(e.tokenizer=e.ja.tokenizer),this.tokenizerFn&&(this.tokenizerFn=e.ja.tokenizer))};var t=new e.TinySegmenter;e.ja.tokenizer=function(i){var n,o,s,p,a,u,m,l,c,f;if(!arguments.length||null==i||void 0==i)return[];if(Array.isArray(i))return i.map(function(t){return r?new e.Token(t.toLowerCase()):t.toLowerCase()});for(o=i.toString().toLowerCase().replace(/^\s+/,""),n=o.length-1;n>=0;n--)if(/\S/.test(o.charAt(n))){o=o.substring(0,n+1);break}for(a=[],s=o.length,c=0,l=0;c<=s;c++)if(u=o.charAt(c),m=c-l,u.match(/\s/)||c==s){if(m>0)for(p=t.segment(o.slice(l,c)).filter(function(e){return!!e}),f=l,n=0;n<p.length;n++)r?a.push(new e.Token(p[n],{position:[f,p[n].length],index:a.length})):a.push(p[n]),f+=p[n].length;l=c+1}return a},e.ja.stemmer=function(){return function(e){return e}}(),e.Pipeline.registerFunction(e.ja.stemmer,"stemmer-ja"),e.ja.wordCharacters="一二三四五六七八九十百千万億兆一-龠々〆ヵヶぁ-んァ-ヴーア-ン゙a-zA-Za-zA-Z0-90-9",e.ja.trimmer=e.trimmerSupport.generateTrimmer(e.ja.wordCharacters),e.Pipeline.registerFunction(e.ja.trimmer,"trimmer-ja"),e.ja.stopWordFilter=e.generateStopWordFilter("これ それ あれ この その あの ここ そこ あそこ こちら どこ だれ なに なん 何 私 貴方 貴方方 我々 私達 あの人 あのかた 彼女 彼 です あります おります います は が の に を で え から まで より も どの と し それで しかし".split(" 
")),e.Pipeline.registerFunction(e.ja.stopWordFilter,"stopWordFilter-ja"),e.jp=e.ja,e.Pipeline.registerFunction(e.jp.stemmer,"stemmer-jp"),e.Pipeline.registerFunction(e.jp.trimmer,"trimmer-jp"),e.Pipeline.registerFunction(e.jp.stopWordFilter,"stopWordFilter-jp")}});


1

assets/javascripts/lunr/min/lunr.jp.min.js

vendored

Normal file

@ -0,0 +1 @@

module.exports=require("./lunr.ja");

1

assets/javascripts/lunr/min/lunr.multi.min.js

vendored

Normal file

@ -0,0 +1 @@


!function(e,t){"function"==typeof define&&define.amd?define(t):"object"==typeof exports?module.exports=t():t()(e.lunr)}(this,function(){return function(e){e.multiLanguage=function(){for(var t=Array.prototype.slice.call(arguments),i=t.join("-"),r="",n=[],s=[],p=0;p<t.length;++p)"en"==t[p]?(r+="\\w",n.unshift(e.stopWordFilter),n.push(e.stemmer),s.push(e.stemmer)):(r+=e[t[p]].wordCharacters,e[t[p]].stopWordFilter&&n.unshift(e[t[p]].stopWordFilter),e[t[p]].stemmer&&(n.push(e[t[p]].stemmer),s.push(e[t[p]].stemmer)));var o=e.trimmerSupport.generateTrimmer(r);return e.Pipeline.registerFunction(o,"lunr-multi-trimmer-"+i),n.unshift(o),function(){this.pipeline.reset(),this.pipeline.add.apply(this.pipeline,n),this.searchPipeline&&(this.searchPipeline.reset(),this.searchPipeline.add.apply(this.searchPipeline,s))}}}});


18

assets/javascripts/lunr/min/lunr.nl.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.no.min.js

vendored

Normal file

@ -0,0 +1,18 @@
/*!
 * Lunr languages, `Norwegian` language
 * https://github.com/MihaiValentin/lunr-languages
 *
 * Copyright 2014, Mihai Valentin
 * http://www.mozilla.org/MPL/
 */
/*!
 * based on
 * Snowball JavaScript Library v0.3
 * http://code.google.com/p/urim/
 * http://snowball.tartarus.org/
 *
 * Copyright 2010, Oleg Mazko
 * http://www.mozilla.org/MPL/
 */

!function(e,r){"function"==typeof define&&define.amd?define(r):"object"==typeof exports?module.exports=r():r()(e.lunr)}(this,function(){return function(e){if(void 0===e)throw new Error("Lunr is not present. Please include / require Lunr before this script.");if(void 0===e.stemmerSupport)throw new Error("Lunr stemmer support is not present. Please include / require Lunr stemmer support before this script.");e.no=function(){this.pipeline.reset(),this.pipeline.add(e.no.trimmer,e.no.stopWordFilter,e.no.stemmer),this.searchPipeline&&(this.searchPipeline.reset(),this.searchPipeline.add(e.no.stemmer))},e.no.wordCharacters="A-Za-zªºÀ-ÖØ-öø-ʸˠ-ˤᴀ-ᴥᴬ-ᵜᵢ-ᵥᵫ-ᵷᵹ-ᶾḀ-ỿⁱⁿₐ-ₜKÅℲⅎⅠ-ↈⱠ-ⱿꜢ-ꞇꞋ-ꞭꞰ-ꞷꟷ-ꟿꬰ-ꭚꭜ-ꭤff-stA-Za-z",e.no.trimmer=e.trimmerSupport.generateTrimmer(e.no.wordCharacters),e.Pipeline.registerFunction(e.no.trimmer,"trimmer-no"),e.no.stemmer=function(){var r=e.stemmerSupport.Among,n=e.stemmerSupport.SnowballProgram,i=new function(){function e(){var e,r=w.cursor+3;if(a=w.limit,0<=r||r<=w.limit){for(s=r;;){if(e=w.cursor,w.in_grouping(d,97,248)){w.cursor=e;break}if(e>=w.limit)return;w.cursor=e+1}for(;!w.out_grouping(d,97,248);){if(w.cursor>=w.limit)return;w.cursor++}a=w.cursor,a<s&&(a=s)}}function i(){var e,r,n;if(w.cursor>=a&&(r=w.limit_backward,w.limit_backward=a,w.ket=w.cursor,e=w.find_among_b(m,29),w.limit_backward=r,e))switch(w.bra=w.cursor,e){case 1:w.slice_del();break;case 2:n=w.limit-w.cursor,w.in_grouping_b(c,98,122)?w.slice_del():(w.cursor=w.limit-n,w.eq_s_b(1,"k")&&w.out_grouping_b(d,97,248)&&w.slice_del());break;case 3:w.slice_from("er")}}function t(){var e,r=w.limit-w.cursor;w.cursor>=a&&(e=w.limit_backward,w.limit_backward=a,w.ket=w.cursor,w.find_among_b(u,2)?(w.bra=w.cursor,w.limit_backward=e,w.cursor=w.limit-r,w.cursor>w.limit_backward&&(w.cursor--,w.bra=w.cursor,w.slice_del())):w.limit_backward=e)}function o(){var 
e,r;w.cursor>=a&&(r=w.limit_backward,w.limit_backward=a,w.ket=w.cursor,e=w.find_among_b(l,11),e?(w.bra=w.cursor,w.limit_backward=r,1==e&&w.slice_del()):w.limit_backward=r)}var s,a,m=[new r("a",-1,1),new r("e",-1,1),new r("ede",1,1),new r("ande",1,1),new r("ende",1,1),new r("ane",1,1),new r("ene",1,1),new r("hetene",6,1),new r("erte",1,3),new r("en",-1,1),new r("heten",9,1),new r("ar",-1,1),new r("er",-1,1),new r("heter",12,1),new r("s",-1,2),new r("as",14,1),new r("es",14,1),new r("edes",16,1),new r("endes",16,1),new r("enes",16,1),new r("hetenes",19,1),new r("ens",14,1),new r("hetens",21,1),new r("ers",14,1),new r("ets",14,1),new r("et",-1,1),new r("het",25,1),new r("ert",-1,3),new r("ast",-1,1)],u=[new r("dt",-1,-1),new r("vt",-1,-1)],l=[new r("leg",-1,1),new r("eleg",0,1),new r("ig",-1,1),new r("eig",2,1),new r("lig",2,1),new r("elig",4,1),new r("els",-1,1),new r("lov",-1,1),new r("elov",7,1),new r("slov",7,1),new r("hetslov",9,1)],d=[17,65,16,1,0,0,0,0,0,0,0,0,0,0,0,0,48,0,128],c=[119,125,149,1],w=new n;this.setCurrent=function(e){w.setCurrent(e)},this.getCurrent=function(){return w.getCurrent()},this.stem=function(){var r=w.cursor;return e(),w.limit_backward=r,w.cursor=w.limit,i(),w.cursor=w.limit,t(),w.cursor=w.limit,o(),!0}};return function(e){return"function"==typeof e.update?e.update(function(e){return i.setCurrent(e),i.stem(),i.getCurrent()}):(i.setCurrent(e),i.stem(),i.getCurrent())}}(),e.Pipeline.registerFunction(e.no.stemmer,"stemmer-no"),e.no.stopWordFilter=e.generateStopWordFilter("alle at av bare begge ble blei bli blir blitt både båe da de deg dei deim deira deires dem den denne der dere deres det dette di din disse ditt du dykk dykkar då eg ein eit eitt eller elles en enn er et ett etter for fordi fra før ha hadde han hans har hennar henne hennes her hjå ho hoe honom hoss hossen hun hva hvem hver hvilke hvilken hvis hvor hvordan hvorfor i ikke ikkje ikkje ingen ingi inkje inn inni ja jeg kan kom korleis korso kun kunne kva kvar kvarhelst kven kvi 
kvifor man mange me med medan meg meget mellom men mi min mine mitt mot mykje ned no noe noen noka noko nokon nokor nokre nå når og også om opp oss over på samme seg selv si si sia sidan siden sin sine sitt sjøl skal skulle slik so som som somme somt så sånn til um upp ut uten var vart varte ved vere verte vi vil ville vore vors vort vår være være vært å".split(" ")),e.Pipeline.registerFunction(e.no.stopWordFilter,"stopWordFilter-no")}});


18

assets/javascripts/lunr/min/lunr.pt.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.ro.min.js

vendored

Normal file

18

assets/javascripts/lunr/min/lunr.ru.min.js

vendored

Normal file

1

assets/javascripts/lunr/min/lunr.stemmer.support.min.js

vendored

Normal file

@ -0,0 +1 @@


!function(r,t){"function"==typeof define&&define.amd?define(t):"object"==typeof exports?module.exports=t():t()(r.lunr)}(this,function(){return function(r){r.stemmerSupport={Among:function(r,t,i,s){if(this.toCharArray=function(r){for(var t=r.length,i=new Array(t),s=0;s<t;s++)i[s]=r.charCodeAt(s);return i},!r&&""!=r||!t&&0!=t||!i)throw"Bad Among initialisation: s:"+r+", substring_i: "+t+", result: "+i;this.s_size=r.length,this.s=this.toCharArray(r),this.substring_i=t,this.result=i,this.method=s},SnowballProgram:function(){var r;return{bra:0,ket:0,limit:0,cursor:0,limit_backward:0,setCurrent:function(t){r=t,this.cursor=0,this.limit=t.length,this.limit_backward=0,this.bra=this.cursor,this.ket=this.limit},getCurrent:function(){var t=r;return r=null,t},in_grouping:function(t,i,s){if(this.cursor<this.limit){var e=r.charCodeAt(this.cursor);if(e<=s&&e>=i&&(e-=i,t[e>>3]&1<<(7&e)))return this.cursor++,!0}return!1},in_grouping_b:function(t,i,s){if(this.cursor>this.limit_backward){var e=r.charCodeAt(this.cursor-1);if(e<=s&&e>=i&&(e-=i,t[e>>3]&1<<(7&e)))return this.cursor--,!0}return!1},out_grouping:function(t,i,s){if(this.cursor<this.limit){var e=r.charCodeAt(this.cursor);if(e>s||e<i)return this.cursor++,!0;if(e-=i,!(t[e>>3]&1<<(7&e)))return this.cursor++,!0}return!1},out_grouping_b:function(t,i,s){if(this.cursor>this.limit_backward){var e=r.charCodeAt(this.cursor-1);if(e>s||e<i)return this.cursor--,!0;if(e-=i,!(t[e>>3]&1<<(7&e)))return this.cursor--,!0}return!1},eq_s:function(t,i){if(this.limit-this.cursor<t)return!1;for(var s=0;s<t;s++)if(r.charCodeAt(this.cursor+s)!=i.charCodeAt(s))return!1;return this.cursor+=t,!0},eq_s_b:function(t,i){if(this.cursor-this.limit_backward<t)return!1;for(var s=0;s<t;s++)if(r.charCodeAt(this.cursor-t+s)!=i.charCodeAt(s))return!1;return this.cursor-=t,!0},find_among:function(t,i){for(var s=0,e=i,n=this.cursor,u=this.limit,o=0,h=0,c=!1;;){for(var 
a=s+(e-s>>1),f=0,l=o<h?o:h,_=t[a],m=l;m<_.s_size;m++){if(n+l==u){f=-1;break}if(f=r.charCodeAt(n+l)-_.s[m])break;l++}if(f<0?(e=a,h=l):(s=a,o=l),e-s<=1){if(s>0||e==s||c)break;c=!0}}for(;;){var _=t[s];if(o>=_.s_size){if(this.cursor=n+_.s_size,!_.method)return _.result;var b=_.method();if(this.cursor=n+_.s_size,b)return _.result}if((s=_.substring_i)<0)return 0}},find_among_b:function(t,i){for(var s=0,e=i,n=this.cursor,u=this.limit_backward,o=0,h=0,c=!1;;){for(var a=s+(e-s>>1),f=0,l=o<h?o:h,_=t[a],m=_.s_size-1-l;m>=0;m--){if(n-l==u){f=-1;break}if(f=r.charCodeAt(n-1-l)-_.s[m])break;l++}if(f<0?(e=a,h=l):(s=a,o=l),e-s<=1){if(s>0||e==s||c)break;c=!0}}for(;;){var _=t[s];if(o>=_.s_size){if(this.cursor=n-_.s_size,!_.method)return _.result;var b=_.method();if(this.cursor=n-_.s_size,b)return _.result}if((s=_.substring_i)<0)return 0}},replace_s:function(t,i,s){var e=s.length-(i-t),n=r.substring(0,t),u=r.substring(i);return r=n+s+u,this.limit+=e,this.cursor>=i?this.cursor+=e:this.cursor>t&&(this.cursor=t),e},slice_check:function(){if(this.bra<0||this.bra>this.ket||this.ket>this.limit||this.limit>r.length)throw"faulty slice operation"},slice_from:function(r){this.slice_check(),this.replace_s(this.bra,this.ket,r)},slice_del:function(){this.slice_from("")},insert:function(r,t,i){var s=this.replace_s(r,t,i);r<=this.bra&&(this.bra+=s),r<=this.ket&&(this.ket+=s)},slice_to:function(){return this.slice_check(),r.substring(this.bra,this.ket)},eq_v_b:function(r){return this.eq_s_b(r.length,r)}}}},r.trimmerSupport={generateTrimmer:function(r){var t=new RegExp("^[^"+r+"]+"),i=new RegExp("[^"+r+"]+$");return function(r){return"function"==typeof r.update?r.update(function(r){return r.replace(t,"").replace(i,"")}):r.replace(t,"").replace(i,"")}}}}});


18

assets/javascripts/lunr/min/lunr.sv.min.js

vendored

Normal file

@ -0,0 +1,18 @@
/*!
 * Lunr languages, `Swedish` language
 * https://github.com/MihaiValentin/lunr-languages
 *
 * Copyright 2014, Mihai Valentin
 * http://www.mozilla.org/MPL/
 */
/*!
 * based on
 * Snowball JavaScript Library v0.3
 * http://code.google.com/p/urim/
 * http://snowball.tartarus.org/
 *
 * Copyright 2010, Oleg Mazko
 * http://www.mozilla.org/MPL/
 */

!function(e,r){"function"==typeof define&&define.amd?define(r):"object"==typeof exports?module.exports=r():r()(e.lunr)}(this,function(){return function(e){if(void 0===e)throw new Error("Lunr is not present. Please include / require Lunr before this script.");if(void 0===e.stemmerSupport)throw new Error("Lunr stemmer support is not present. Please include / require Lunr stemmer support before this script.");e.sv=function(){this.pipeline.reset(),this.pipeline.add(e.sv.trimmer,e.sv.stopWordFilter,e.sv.stemmer),this.searchPipeline&&(this.searchPipeline.reset(),this.searchPipeline.add(e.sv.stemmer))},e.sv.wordCharacters="A-Za-zªºÀ-ÖØ-öø-ʸˠ-ˤᴀ-ᴥᴬ-ᵜᵢ-ᵥᵫ-ᵷᵹ-ᶾḀ-ỿⁱⁿₐ-ₜKÅℲⅎⅠ-ↈⱠ-ⱿꜢ-ꞇꞋ-ꞭꞰ-ꞷꟷ-ꟿꬰ-ꭚꭜ-ꭤff-stA-Za-z",e.sv.trimmer=e.trimmerSupport.generateTrimmer(e.sv.wordCharacters),e.Pipeline.registerFunction(e.sv.trimmer,"trimmer-sv"),e.sv.stemmer=function(){var r=e.stemmerSupport.Among,n=e.stemmerSupport.SnowballProgram,t=new function(){function e(){var e,r=w.cursor+3;if(o=w.limit,0<=r||r<=w.limit){for(a=r;;){if(e=w.cursor,w.in_grouping(l,97,246)){w.cursor=e;break}if(w.cursor=e,w.cursor>=w.limit)return;w.cursor++}for(;!w.out_grouping(l,97,246);){if(w.cursor>=w.limit)return;w.cursor++}o=w.cursor,o<a&&(o=a)}}function t(){var e,r=w.limit_backward;if(w.cursor>=o&&(w.limit_backward=o,w.cursor=w.limit,w.ket=w.cursor,e=w.find_among_b(u,37),w.limit_backward=r,e))switch(w.bra=w.cursor,e){case 1:w.slice_del();break;case 2:w.in_grouping_b(d,98,121)&&w.slice_del()}}function i(){var e=w.limit_backward;w.cursor>=o&&(w.limit_backward=o,w.cursor=w.limit,w.find_among_b(c,7)&&(w.cursor=w.limit,w.ket=w.cursor,w.cursor>w.limit_backward&&(w.bra=--w.cursor,w.slice_del())),w.limit_backward=e)}function s(){var e,r;if(w.cursor>=o){if(r=w.limit_backward,w.limit_backward=o,w.cursor=w.limit,w.ket=w.cursor,e=w.find_among_b(m,5))switch(w.bra=w.cursor,e){case 1:w.slice_del();break;case 2:w.slice_from("lös");break;case 3:w.slice_from("full")}w.limit_backward=r}}var a,o,u=[new r("a",-1,1),new r("arna",0,1),new 
r("erna",0,1),new r("heterna",2,1),new r("orna",0,1),new r("ad",-1,1),new r("e",-1,1),new r("ade",6,1),new r("ande",6,1),new r("arne",6,1),new r("are",6,1),new r("aste",6,1),new r("en",-1,1),new r("anden",12,1),new r("aren",12,1),new r("heten",12,1),new r("ern",-1,1),new r("ar",-1,1),new r("er",-1,1),new r("heter",18,1),new r("or",-1,1),new r("s",-1,2),new r("as",21,1),new r("arnas",22,1),new r("ernas",22,1),new r("ornas",22,1),new r("es",21,1),new r("ades",26,1),new r("andes",26,1),new r("ens",21,1),new r("arens",29,1),new r("hetens",29,1),new r("erns",21,1),new r("at",-1,1),new r("andet",-1,1),new r("het",-1,1),new r("ast",-1,1)],c=[new r("dd",-1,-1),new r("gd",-1,-1),new r("nn",-1,-1),new r("dt",-1,-1),new r("gt",-1,-1),new r("kt",-1,-1),new r("tt",-1,-1)],m=[new r("ig",-1,1),new r("lig",0,1),new r("els",-1,1),new r("fullt",-1,3),new r("löst",-1,2)],l=[17,65,16,1,0,0,0,0,0,0,0,0,0,0,0,0,24,0,32],d=[119,127,149],w=new n;this.setCurrent=function(e){w.setCurrent(e)},this.getCurrent=function(){return w.getCurrent()},this.stem=function(){var r=w.cursor;return e(),w.limit_backward=r,w.cursor=w.limit,t(),w.cursor=w.limit,i(),w.cursor=w.limit,s(),!0}};return function(e){return"function"==typeof e.update?e.update(function(e){return t.setCurrent(e),t.stem(),t.getCurrent()}):(t.setCurrent(e),t.stem(),t.getCurrent())}}(),e.Pipeline.registerFunction(e.sv.stemmer,"stemmer-sv"),e.sv.stopWordFilter=e.generateStopWordFilter("alla allt att av blev bli blir blivit de dem den denna deras dess dessa det detta dig din dina ditt du där då efter ej eller en er era ert ett från för ha hade han hans har henne hennes hon honom hur här i icke ingen inom inte jag ju kan kunde man med mellan men mig min mina mitt mot mycket ni nu när någon något några och om oss på samma sedan sig sin sina sitta själv skulle som så sådan sådana sådant till under upp ut utan vad var vara varför varit varje vars vart vem vi vid vilka vilkas vilken vilket vår våra vårt än är åt över".split(" 
")),e.Pipeline.registerFunction(e.sv.stopWordFilter,"stopWordFilter-sv")}});


18

assets/javascripts/lunr/min/lunr.tr.min.js

vendored

Normal file

1

assets/javascripts/lunr/min/lunr.vi.min.js

vendored

Normal file

@ -0,0 +1 @@


!function(e,r){"function"==typeof define&&define.amd?define(r):"object"==typeof exports?module.exports=r():r()(e.lunr)}(this,function(){return function(e){if(void 0===e)throw new Error("Lunr is not present. Please include / require Lunr before this script.");if(void 0===e.stemmerSupport)throw new Error("Lunr stemmer support is not present. Please include / require Lunr stemmer support before this script.");e.vi=function(){this.pipeline.reset(),this.pipeline.add(e.vi.stopWordFilter,e.vi.trimmer)},e.vi.wordCharacters="[A-Za-ẓ̀͐́͑̉̃̓ÂâÊêÔôĂ-ăĐ-đƠ-ơƯ-ư]",e.vi.trimmer=e.trimmerSupport.generateTrimmer(e.vi.wordCharacters),e.Pipeline.registerFunction(e.vi.trimmer,"trimmer-vi"),e.vi.stopWordFilter=e.generateStopWordFilter("là cái nhưng mà".split(" "))}});


1

assets/javascripts/lunr/tinyseg.min.js

vendored

Normal file

30

assets/javascripts/vendor.6a3d08fc.min.js

vendored

Normal file

1

assets/javascripts/vendor.6a3d08fc.min.js.map

Normal file

59

assets/javascripts/worker/search.4ac00218.min.js

vendored

Normal file

1

assets/javascripts/worker/search.4ac00218.min.js.map

Normal file

3

assets/stylesheets/main.bc7e593a.min.css

vendored

Normal file

1

assets/stylesheets/main.bc7e593a.min.css.map

Normal file

3

assets/stylesheets/palette.ab28b872.min.css

vendored

Normal file

1

assets/stylesheets/palette.ab28b872.min.css.map

Normal file

38

build.sh

@ -1,38 +0,0 @@
#!/bin/bash
####################################################################
# PROJECT: Data Controller Docs #
####################################################################

## Create regular mkdocs docs

echo 'extracting licences'

OUTFILE='docs/licences.md'

cat > $OUTFILE <<'EOL'
<!-- this page is AUTOMATICALLY updated!! -->
# Data Controller for SAS® - Source Licences

## Overview
Data Controller source licences are extracted automatically from our repo using the [license-checker](https://www.npmjs.com/package/license-checker) NPM module

```
EOL

license-checker --production --relativeLicensePath --direct --start ../dcfrontend >> docs/licences.md

echo '```' >> docs/licences.md

echo 'building mkdocs'
pip3 install mkdocs
pip3 install mkdocs-material
pip3 install fontawesome_markdown
python3 -m mkdocs build --clean

#mkdocs serve

# update slides
mkdir site/slides
npx @marp-team/marp-cli slides/innovation/innovation.md -o ./site/slides/innovation/index.html

rsync -avz --exclude .git/ --del -e "ssh -p 722" site/ macropeo@77.72.0.226:/home/macropeo/docs.datacontroller.io

1453

column-level-security/index.html

Normal file

1318

dc-overview/index.html

Normal file

1359

dc-userguide/index.html

Normal file

|

Before Width: | Height: | Size: 1.0 MiB After Width: | Height: | Size: 1.0 MiB |

1180

dcc-dates/index.html

Normal file

1176

dcc-groups/index.html

Normal file

1373

dcc-options/index.html

Normal file

1269

dcc-security/index.html

Normal file

1262

dcc-selectbox/index.html

Normal file

1594

dcc-tables/index.html

Normal file

1276

dcc-validations/index.html

Normal file

1444

dci-deploysas9/index.html

Normal file

1469

dci-deploysasviya/index.html

Normal file

15

dci-evaluation/index.html

Normal file

@ -0,0 +1,15 @@

<!doctype html>
<html lang="en">
<head>
    <meta charset="utf-8">
    <title>Redirecting...</title>
    <link rel="canonical" href="/dci-deploysas9/">
    <meta name="robots" content="noindex">
    <script>var anchor=window.location.hash.substr(1);location.href="/dci-deploysas9/"+(anchor?"#"+anchor:"")</script>
    <meta http-equiv="refresh" content="0; url=/dci-deploysas9/">
</head>
<body>
Redirecting...
</body>
</html>

1245

dci-requirements/index.html

Normal file

1422

dci-stpinstance/index.html

Normal file

1309

dci-troubleshooting/index.html

Normal file

1237

dcu-datacatalog/index.html

Normal file

1225

dcu-fileupload/index.html

Normal file

1197

dcu-lineage/index.html

Normal file

1231

dcu-tableviewer/index.html

Normal file

@ -1,98 +0,0 @@
---
layout: article
title: Admin Services
description: Data Controller contains a number of admin-only web services, such as DB Export, Lineage Generation, and Data Catalog refresh.
---

# Admin Services

Several web services have been defined to provide additional functionality outside of the user interface. These somewhat-hidden services must be called directly, using a web browser.

In a future version, these features will be made available from an Admin screen (so, no need to manually modify URLs).

The URL is made up of several components:

* `SERVERURL` -> the domain (and port) on which your SAS server resides
* `EXECUTOR` -> Either `SASStoredProcess` for SAS 9, else `SASJobExecution` for Viya
* `APPLOC` -> The root folder location in which the Data Controller backend services were deployed
* `SERVICE` -> The actual Data Controller service being described. May include additional parameters.

To illustrate the above, consider the following URL:

|

|

||||||

|

|

||||||

[https://viya.4gl.io/SASJobExecution/?_program=/Public/app/viya/services/admin/exportdb&flavour=PGSQL](https://viya.4gl.io/SASJobExecution/?_program=/Public/app/viya/services/admin/exportdb&flavour=PGSQL)

|

|

||||||

|

|

||||||

This is broken down into:

|

|

||||||

|

|

||||||

* `$SERVERURL` = `https://viya.4gl.io`

|

|

||||||

* `$EXECUTOR` = `SASJobExecution`

|

|

||||||

* `$APPLOC` = `/Public/app/viya`

|

|

||||||

* `$SERVICE` = `services/admin/exportdb&flavour=PGSQL`

|

|

||||||

|

|

||||||

The below sections will only describe the `$SERVICE` component - you may construct this into a URL as follows:

|

|

||||||

|

|

||||||

* `$SERVERURL/$EXECUTOR?_program=$APPLOC/$SERVICE`
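The pattern above can be sketched in a few lines of Python. This is only an illustration of how the four components join together; the function name `build_service_url` is hypothetical and not part of Data Controller, and the values are taken from the example URL earlier on this page.

```python
def build_service_url(server_url: str, executor: str, apploc: str, service: str) -> str:
    """Join the four URL components following $SERVERURL/$EXECUTOR?_program=$APPLOC/$SERVICE."""
    # Strip stray slashes so the pieces join with exactly one separator.
    base = server_url.rstrip("/")
    root = apploc.rstrip("/")
    return f"{base}/{executor}?_program={root}/{service.lstrip('/')}"


url = build_service_url(
    "https://viya.4gl.io",                    # SERVERURL
    "SASJobExecution",                        # EXECUTOR (SASStoredProcess on SAS 9)
    "/Public/app/viya",                       # APPLOC
    "services/admin/exportdb&flavour=PGSQL",  # SERVICE, including its parameters
)
print(url)
```

Any extra parameters (such as `&flavour=`) simply travel as part of the `$SERVICE` string.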

|

|

||||||

|

|

||||||

## Export Config

|

|

||||||

|

|

||||||

This service provides a zip file containing the current database configuration. This is useful when migrating to a different Data Controller database instance.

|

|

||||||

|

|

||||||

EXAMPLE:

|

|

||||||

|

|

||||||

* `services/admin/exportconfig`

|

|

||||||

|

|

||||||

## Export Database

|

|

||||||

Exports the Data Controller control library as DB-specific DDL. The following URL parameters may be added:

|

|

||||||

|

|

||||||

* `&flavour=` (only PGSQL supported at this time)

|

|

||||||

* `&schema=` (optional, if target schema is needed)

|

|

||||||

|

|

||||||

EXAMPLES:

|

|

||||||

|

|

||||||

* `services/admin/exportdb&flavour=PGSQL&schema=DC`

|

|

||||||

* `services/admin/exportdb&flavour=PGSQL`

|

|

||||||

|

|

||||||

## Refresh Data Catalog

|

|

||||||

|

|

||||||

In any SAS estate, it's unlikely the size & shape of data will remain static. By running a regular Catalog Scan, you can track changes such as:

|

|

||||||

|

|

||||||

- Library Properties (size, schema, path, number of tables)

|

|

||||||

- Table Properties (size, number of columns, primary keys)

|

|

||||||

- Variable Properties (presence in a primary key, constraints, position in the dataset)

|

|

||||||

|

|

||||||

The data is stored with SCD2 so you can actually **track changes to your model over time**! Curious when that new column appeared? Just check the history in [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs).

|

|

||||||

|

|

||||||

To run the refresh process, just trigger the stored process, eg below:

|

|

||||||

|

|

||||||

* `services/admin/refreshcatalog`

|

|

||||||

* `services/admin/refreshcatalog&libref=MYLIB`

|

|

||||||

|

|

||||||

The optional `&libref=` parameter allows you to run the process for a single library. Just provide the libref.

|

|

||||||

|

|

||||||

When doing a full scan, the following LIBREFS are ignored:

|

|

||||||

|

|

||||||

* `CASUSER`
* `MAPSGFK`
* `SASUSER`
* `SASWORK`
* `STPSAMP`
* `TEMP`
* `WORK`

|

|

||||||

|

|

||||||

Additional LIBREFs can be excluded by adding them to the `DCXXXX.MPE_CONFIG` table (where `var_scope='DC_CATALOG' and var_name='DC_IGNORELIBS'`). Use a pipe (`|`) symbol to separate them. This can be useful where there are connection issues for a particular library.
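As a rough illustration of how a pipe-separated `DC_IGNORELIBS` value combines with the built-in exclusions, consider the Python sketch below. It is not the actual backend logic (which runs in SAS); the function name `librefs_to_scan` and the sample librefs are assumptions made for the example.

```python
# Librefs always skipped during a full scan, as listed above.
DEFAULT_IGNORED = {"CASUSER", "MAPSGFK", "SASUSER", "SASWORK", "STPSAMP", "TEMP", "WORK"}


def librefs_to_scan(all_librefs, ignorelibs_value=""):
    """Return the librefs that a full scan would still visit."""
    # Split the configured value on the pipe symbol, ignoring blanks and case.
    extra = {part.strip().upper() for part in ignorelibs_value.split("|") if part.strip()}
    ignored = DEFAULT_IGNORED | extra
    return [lib for lib in all_librefs if lib.upper() not in ignored]


# Hypothetical estate with one troublesome library (ORALIB) excluded via config.
print(librefs_to_scan(["WORK", "MYLIB", "ORALIB", "sasuser"], "ORALIB|BADLIB"))
```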

|

|

||||||

|

|

||||||

Be aware that the scan process can take a long time if you have a lot of tables!

|

|

||||||

|

|

||||||

Output tables (all SCD2):

|

|

||||||

|

|

||||||

* [MPE_DATACATALOG_LIBS](/tables/mpe_datacatalog_libs) - Library attributes

|

|

||||||

* [MPE_DATACATALOG_TABS](/tables/mpe_datacatalog_tabs) - Table attributes

|

|

||||||

* [MPE_DATACATALOG_VARS](/tables/mpe_datacatalog_vars) - Column attributes

|

|

||||||

* [MPE_DATASTATUS_LIBS](/tables/mpe_datastatus_libs) - Frequently changing library attributes (such as size & number of tables)

|

|

||||||

* [MPE_DATASTATUS_TABS](/tables/mpe_datastatus_tabs) - Frequently changing table attributes (such as size & number of rows)

|

|

||||||

|

|

||||||

## Update Licence Key

|

|

||||||

|

|

||||||

Whenever navigating Data Controller, there is always a hash (`#`) in the URL. To access the licence key screen, remove all content to the RIGHT of the hash and add the following string: `/licensing/update`.
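The URL change described above can be expressed as a small Python helper. This is a sketch only; the function name `licensing_url` and the example address are hypothetical.

```python
def licensing_url(current_url: str) -> str:
    """Drop everything to the right of the hash and append the licensing route."""
    base, _, _ = current_url.partition("#")
    return f"{base}#/licensing/update"


# Hypothetical Data Controller address, for illustration only.
print(licensing_url("https://sas.example.com/dc/#/view/data"))
```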

|

|

||||||

|

|

||||||

If you are using https protocol, you will have 2 keys (licence key / activation key). In http mode, there is just one key (licence key) for both boxes.

|

|

||||||

102

docs/api.md

@ -1,102 +0,0 @@

|

|||||||

---

|

|

||||||

layout: article

|

|

||||||

title: API

|

|

||||||

description: The Data Controller API provides a machine-programmable interface for loading spreadsheets into SAS

|

|

||||||

---

|

|

||||||

|

|

||||||

!!! warning

|

|

||||||

Work in Progress!

|

|

||||||

|

|

||||||

# API

|

|

||||||

|

|

||||||

Where a project has a requirement to load Excel Files automatically into SAS, from a remote machine, an API approach is desirable for many reasons:

|

|

||||||

|

|

||||||

* Security. Client access can be limited to just the endpoints they need (rather than being granted full server access).

|

|

||||||

* Flexibility. Well documented, stable APIs allow consumers to build and extend additional products and solutions.

|

|

||||||

* Cost. API solutions are typically self-contained, quick to implement, and easy to learn.

|

|

||||||

|

|

||||||

A Data Controller API would enable teams across an entire enterprise to easily and securely send data to SAS in a transparent and fully automated fashion.

|

|

||||||

|

|

||||||

The API would also benefit from all of Data Controller's existing [data validation](https://docs.datacontroller.io/dcc-validations/) logic (both frontend and backend), data auditing, [alerts](https://docs.datacontroller.io/emails/), and [security control](https://docs.datacontroller.io/dcc-security/) features.

|

|

||||||

|

|

||||||

It is, however, a significant departure from the existing "SAS Content" based deployment, in the following ways:

|

|

||||||

|

|

||||||

1. Server Driven. A machine is required on which to launch, and run, the API application itself.

|

|

||||||

2. Fully Automated. There is no browser, interface, or human involved.

|

|

||||||

3. Extends outside of SAS. There are firewalls, and authentication methods, to consider.

|

|

||||||

|

|

||||||

The Data Controller technical solution will differ, depending on the type of SAS Platform being used. There are three types of SAS Platform:

|

|

||||||

|

|

||||||

1. Foundation SAS - regular, Base SAS.

|

|

||||||

2. SAS EBI - with Metadata.

|

|

||||||

3. SAS Viya - cloud enabled.

|

|

||||||

|

|

||||||

And there are three main options when it comes to building APIs on SAS:

|

|

||||||

|

|

||||||

1. Standalone DC API (Viya Only). Viya comes with [REST APIs](https://developer.sas.com/apis/rest/) out of the box, no middleware needed.

|

|

||||||

2. [SAS 9 API](https://github.com/analytium/sas9api). This is an open-source Java Application, using SAS Authentication.

|

|

||||||

3. [SASjs Server](https://github.com/sasjs/server). An open source NodeJS application, compatible with all major authentication methods and all versions of SAS.

|

|

||||||

|

|

||||||

An additional REST API option for SAS EBI might have been [BI Web Services](https://documentation.sas.com/doc/en/bicdc/9.4/bimtag/p1acycjd86du2hn11czxuog9x0ra.htm), however - it requires platform changes and is not highly secure.

|

|

||||||

|

|

||||||

The compatibility matrix is therefore as follows:

|

|

||||||

|

|

||||||

| Product | Foundation SAS | SAS EBI | SAS Viya |
|---|---|---|---|
| DCAPI | ❌ | ❌ | ✅ |
| DCAPI + SASjs Server | ✅ | ✅ | ✅ |
| DCAPI + SAS 9 API | ❌ | ✅ | ❌ |

|

|

||||||

|

|

||||||

In all cases, a Data Controller API will be surfaced, that makes use of the underlying (raw) API server.

|

|

||||||

|

|

||||||

The following sections break down these options, and the work remaining to make them a reality.

|

|

||||||

|

|

||||||

## Standalone DC API (Viya Only)

|

|

||||||

|

|

||||||

For Viya, the investment necessary is relatively low, thanks to the API-first nature of the platform. In addition, the SASjs framework already provides most of the necessary functionality - such as authentication, service execution, handling results & logs, etc. Finally, the Data Controller team have already built an API Bridge (specific to another customer, hence the building blocks are in place).

|

|

||||||

|

|

||||||

The work to complete the Viya version of the API is as follows:

|

|

||||||

|

|

||||||

* Authorisation interface

|

|

||||||

* Creation of API services

|

|

||||||

* Tests & Automated Deployments

|

|

||||||

* Developer docs

|

|

||||||

* Swagger API

|

|

||||||

* Public Documentation

|

|

||||||

|

|

||||||

Cost to complete - £5,000 (Viya Only)

|

|

||||||

|

|

||||||

|

|

||||||

## SASjs Server (Foundation SAS)

|

|

||||||

|

|

||||||

[SASjs Server](https://github.com/sasjs/server) already provides an API interface over Foundation SAS. An example of building a web app using SASjs Server can be found [here](https://www.youtube.com/watch?v=F23j0R2RxSA). In order for it to fulfill the role as the engine behind the Data Controller API, additional work is needed - specifically:

|

|

||||||

|

|

||||||

* Secure (Enterprise) Authentication

|

|

||||||

* Users & Groups

|

|

||||||

* Feature configuration (ability to restrict features to different groups)

|

|

||||||

|

|

||||||

On top of this, the DC API part would cover:

|

|

||||||

|

|

||||||

* Authorisation interface

|

|

||||||

* Creation of API services

|

|

||||||

* Tests & Automated Deployments

|

|

||||||

* Developer docs

|

|

||||||

* Swagger API

|

|

||||||

* Public Documentation

|

|

||||||

|

|

||||||

Cost to complete - £10,000 (fixed)

|

|

||||||

|

|

||||||

Given that all three SAS platforms have Foundation SAS available, this option will work everywhere. The only restriction is that the sasjs/server instance **must** be located on the same server as SAS.

|

|

||||||

|

|

||||||

|

|

||||||

## SAS 9 API (SAS EBI)

|

|

||||||

|

|

||||||

This product has one major benefit - there is nothing to install on the SAS Platform itself. It connects to SAS in much the same way as Enterprise Guide (using the SAS IOM).

|

|

||||||

|

|

||||||

Website: [https://sas9api.io](https://sas9api.io)

|

|

||||||

|

|

||||||

Github: [https://github.com/analytium/sas9api](https://github.com/analytium/sas9api)

|

|

||||||

|

|

||||||

The downside is that the features needed by Data Controller are not present in the API. Furthermore, the tool is not under active development. To build out the necessary functionality, it will require us to source a senior Java developer on a short term contract to first, understand the tool, and secondly, to update it in a sustainable way.

|

|

||||||

|

|

||||||

We estimate the cost to build Data Controller API on this mechanism at £20,000 - but it could be higher.

|

|

||||||

@ -1,99 +0,0 @@

|

|||||||

---

|

|

||||||

layout: article

|

|

||||||

title: Column Level Security

|

|

||||||

description: Column Level Security prevents end users from viewing or editing specific columns in SAS according to their group membership.

|

|

||||||

og_image: https://docs.datacontroller.io/img/cls_table.png

|

|

||||||

---

|

|

||||||

|

|

||||||

# Column Level Security

|

|

||||||

|

|

||||||

Column level security is implemented by mapping _allowed_ columns to a list of SAS groups. In VIEW mode, only allowed columns are visible. In EDIT mode, allowed columns are _editable_ - the remaining columns are read-only.

|

|

||||||

|

|

||||||

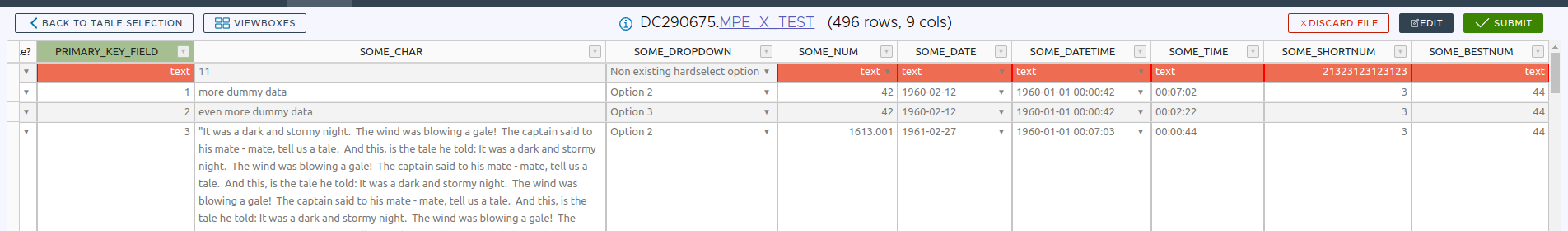

Below is an example of an EDIT table with only one column enabled for editing:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

See also: [Row Level Security](/row-level-security/).

|

|

||||||

|

|

||||||

## Configuration

|

|

||||||

|

|

||||||

The variables in MPE_COLUMN_LEVEL_SECURITY should be configured as follows:

|

|

||||||

|

|

||||||

### CLS_SCOPE

|

|

||||||

Determines whether the rule applies to the VIEW page, the EDIT page, or ALL pages. The impact of the rule varies as follows:

|

|

||||||

|

|

||||||

#### VIEW Scope

|

|

||||||

|

|

||||||

When `CLS_SCOPE in ('VIEW','ALL')` then only the listed columns are _visible_ (unless `CLS_HIDE=1`)

|

|

||||||

|

|

||||||

#### EDIT Scope

|

|

||||||

|

|

||||||

When `CLS_SCOPE in ('EDIT','ALL')` then only the listed columns are _editable_ (the remaining columns are read-only, and visible). Furthermore:

|

|

||||||

|

|

||||||

* The user will be unable to ADD or DELETE records.

|

|

||||||

* Primary Key values are always read only

|

|

||||||

* Primary Key values cannot be hidden (`CLS_HIDE=1` will have no effect)

|

|

||||||

|

|

||||||

|

|

||||||

### CLS_GROUP

|

|

||||||

The SAS Group to which the rule applies. The user could also be a member of a [DC group](/dcc-groups).

|

|

||||||

|

|

||||||

- If a user is in ANY of the groups, the columns will be restricted.

|

|

||||||

- If a user is in NONE of the groups, no restrictions apply (all columns available).

|

|

||||||

- If a user is in MULTIPLE groups, they will see all allowed columns across all groups.

|

|

||||||

- If a user is in the [Data Controller Admin Group](/dcc-groups/#data-controller-admin-group), CLS rules DO NOT APPLY.

|

|

||||||

|

|

||||||

### CLS_LIBREF

|

|

||||||

The library of the target table against which the security rule will be applied

|

|

||||||

|

|

||||||

### CLS_TABLE

|

|

||||||

The target table against which the security rule will be applied

|

|

||||||

|

|

||||||

### CLS_VARIABLE_NM

|

|

||||||

This is the name of the variable against which the security rule will be applied.

|

|

||||||

|

|

||||||

### CLS_ACTIVE

|

|

||||||

If you would like this rule to be applied, be sure this value is set to 1.

|

|

||||||

|

|

||||||

### CLS_HIDE

|

|

||||||

This variable can be set to `1` to _hide_ specific variables, which allows greater control over the EDIT screen in particular. CLS_SCOPE behaviour is impacted as follows:

|

|

||||||

|

|

||||||

* `ALL` - the variable will not be visible in either VIEW or EDIT.

|

|

||||||

* `EDIT` - the variable will not be visible. **Cannot be applied to a primary key column**.

|

|

||||||

* `VIEW` - the variable will not be visible. Can be applied to a primary key column. Simply omitting the row, or setting CLS_ACTIVE to 0, would result in the same behaviour.

|

|

||||||

|

|

||||||

A variable can have multiple values for CLS_HIDE, eg if a user is in multiple groups, or if different rules apply for different scopes. In this case, if the user is in any group where this variable is NOT hidden, then it will be displayed.

|

|

||||||

|

|

||||||

|

|

||||||

## Example Config

|

|

||||||

Example values as follows:

|

|

||||||

|

|

||||||

|CLS_SCOPE:$4|CLS_GROUP:$64|CLS_LIBREF:$8|CLS_TABLE:$32|CLS_VARIABLE_NM:$32|CLS_ACTIVE:8.|CLS_HIDE:8.|
|---|---|---|---|---|---|---|
|EDIT|Group 1|MYLIB|MYDS|VAR_1|1||
|ALL|Group 1|MYLIB|MYDS|VAR_2|1||
|ALL|Group 2|MYLIB|MYDS|VAR_3|1||
|VIEW|Group 1|MYLIB|MYDS|VAR_4|1||
|EDIT|Group 1|MYLIB|MYDS|VAR_5|1|1|

|

|

||||||

|

|

||||||

|

|

||||||

If a user is in Group 1, and viewing `MYLIB.MYDS` in EDIT mode, **all** columns will be visible but only the following columns will be editable:

|

|

||||||

|

|

||||||

* VAR_1

|

|

||||||

* VAR_2

|

|

||||||

|

|

||||||

The user will be unable to add or delete rows.

|

|

||||||

|

|

||||||

If the user is in both Group 1 AND Group 2, viewing `MYLIB.MYDS` in VIEW mode, **only** the following columns will be visible:

|

|

||||||

|

|

||||||

* VAR_2

|

|

||||||

* VAR_3

|

|

||||||

* VAR_4
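The two scenarios above can be reproduced with a short Python sketch. It is an illustration of the rule-matching described on this page, not the Data Controller implementation: the `Rule` type and `allowed_columns` function are hypothetical, the libref / table match is omitted because every example rule targets `MYLIB.MYDS`, and primary key handling and the admin group bypass are not modelled.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    scope: str       # CLS_SCOPE: VIEW, EDIT or ALL
    group: str       # CLS_GROUP
    variable: str    # CLS_VARIABLE_NM
    active: int = 1  # CLS_ACTIVE
    hide: int = 0    # CLS_HIDE (a blank value is treated as 0 here)


# The example configuration from the table above.
RULES = [
    Rule("EDIT", "Group 1", "VAR_1"),
    Rule("ALL", "Group 1", "VAR_2"),
    Rule("ALL", "Group 2", "VAR_3"),
    Rule("VIEW", "Group 1", "VAR_4"),
    Rule("EDIT", "Group 1", "VAR_5", hide=1),
]


def allowed_columns(rules, user_groups, mode):
    """Return the allowed columns for a mode, or None when no rule applies."""
    matching = [
        r for r in rules
        if r.active == 1 and r.group in user_groups and r.scope in (mode, "ALL")
    ]
    if not matching:
        return None  # user is in none of the groups: no restrictions
    # A column is allowed if at least one matching rule does not hide it.
    return {r.variable for r in matching if r.hide != 1}


print(sorted(allowed_columns(RULES, {"Group 1"}, "EDIT")))             # editable columns
print(sorted(allowed_columns(RULES, {"Group 1", "Group 2"}, "VIEW")))  # visible columns
```

In EDIT mode the returned set is the list of editable columns; in VIEW mode it is the list of visible columns.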

|

|

||||||

|

|

||||||

## Video Example

|

|

||||||

|

|

||||||

This short video does a walkthrough of applying Column Level Security from end to end.

|

|

||||||

|

|

||||||

|

|

||||||

<iframe width="560" height="315" src="https://www.youtube.com/embed/jAVt-omtjVc" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture" allowfullscreen></iframe>

|

|

||||||

|

|

||||||

@ -1,46 +0,0 @@

|

|||||||

# Data Controller for SAS®: Overview

|

|

||||||

|

|

||||||

## What does the Data Controller do?

|

|

||||||

|

|

||||||

The Data Controller allows users to add, modify, and delete data. All changes are staged and approved before being applied to the target table. The review process, as well as using generic and repeatable code to perform updates, works to ensure data integrity.

|

|

||||||

|

|

||||||

## What is a Target Table?

|

|

||||||

A Target Table is a physical table, such as a SAS dataset or a Table in a database. The attributes of this table (eg Primary Key, loadtype, library, SCD variables etc) will have been predefined by your administrator so that you can change the data in that table safely and easily.

|

|

||||||

|

|

||||||

## How does it work?

|

|

||||||

|

|

||||||

From the Editor tab, a user selects a library and table for editing. Data can then be edited directly, or uploaded from a file. After submitting the change, the data is loaded to a secure staging area, and the approvers are notified. The approver (which may also be the editor, depending on configuration) reviews the changes and accepts or rejects them. If accepted, the changes are applied to the target table by the system account, and the history of that change is recorded.

|

|

||||||

|

|

||||||

## Who is it for?

|

|

||||||

|

|

||||||

There are 5 roles identified for users of the Data Controller:

|

|

||||||

|

|

||||||

1. *Viewer*. A viewer uses the Data Controller as a means to explore data without risk of locking datasets. By using the Data Controller to view data, it also becomes possible to 'link' to data (eg copy the url to share a table with a colleague).

|

|

||||||

2. *Editor*. An editor makes changes to data in a table (add, modify, delete) and submits those changes to the approver(s) for acceptance.

|

|

||||||

3. *Approver*. An approver accepts / rejects proposed changes to data under their control. If accepted, the change is applied to the target table.

|

|

||||||

4. *Auditor*. An auditor has the ability to review the [history](dc-userguide.md#history) of changes to a particular table.

|

|

||||||

5. *Administrator*. An administrator has the ability to add new [tables](dcc-tables.md) to the Data Controller, and to configure the security settings (at metadata group level) as required.

|

|

||||||

|

|

||||||

## What is a submission?

|

|

||||||

|

|

||||||

The submission is the data that has been staged for approval. Note - submissions are never applied automatically! They must always be approved by 1 or more approvers first. The process of submission varies according to the type of submit.

|

|

||||||

|

|

||||||

### Web Submission

|

|

||||||

When using the Web editor, a frontend check is made against the subset of data that was filtered for editing to see which rows are new / modified / marked deleted. Only those changed rows (from the extract) are submitted to the staging area.
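The frontend check can be pictured as a comparison between the original extract and the edited grid. The Python sketch below is a simplified illustration under stated assumptions: the real check runs in the browser, and the `id` key column and `_delete` flag are hypothetical names chosen for the example.

```python
def changed_rows(extract, edited, key="id"):
    """Compare the edited grid with the original extract, keyed on the primary key."""
    original = {row[key]: row for row in extract}
    staged = []
    for row in edited:
        # Separate the row data from the (hypothetical) delete marker.
        data = {k: v for k, v in row.items() if k != "_delete"}
        before = original.get(row[key])
        if row.get("_delete"):
            staged.append(("deleted", data))
        elif before is None:
            staged.append(("new", data))
        elif data != before:
            staged.append(("modified", data))
        # Unchanged rows are not staged.
    return staged


extract = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}, {"id": 3, "name": "c"}]
edited = [
    {"id": 1, "name": "a"},                   # untouched
    {"id": 2, "name": "B"},                   # modified
    {"id": 3, "name": "c", "_delete": True},  # marked for deletion
    {"id": 4, "name": "d"},                   # new row
]
for action, row in changed_rows(extract, edited):
    print(action, row)
```

Note that a row missing from `edited` is simply not staged, which mirrors the fact that removing a row from display does not mark it for deletion.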

|

|

||||||

|

|

||||||

### Excel Submission

|

|

||||||

When importing an Excel file, all rows are loaded into the web page. You have an option to edit those records. If you edit them, the original Excel file is discarded, and only changed rows are submitted (it becomes a web submission). If you hit SUBMIT immediately, then ALL rows are staged, and a copy of the Excel file is uploaded for audit purposes.

|

|

||||||

|

|

||||||

### CSV submission

|

|

||||||

A CSV upload bypasses the part where the records are loaded into the web page, and ALL rows are sent to the staging area directly. This makes it suitable for larger uploads.

|

|

||||||

|

|

||||||

## Edit Stage Approve Workflow

|

|

||||||

Up to 500 rows can be edited (in the web editor) at one time. These edits are submitted to a staging area. After one or more approvals (acceptances) the changes are applied to the source table.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Use Case Diagram

|

|

||||||

|

|

||||||

There are five roles (Viewer, Editor, Approver, Auditor, Administrator) which correspond to 5 primary use cases (View Table, Edit Table, Approve Change, View Change History, Configure Table)

|

|

||||||

|

|

||||||

<img src="/img/dcu-usecase.svg" height="350" style="border:3px solid black" >

|

|

||||||

@ -1,70 +0,0 @@

|

|||||||

# Data Controller for SAS: User Guide

|

|

||||||

|

|

||||||

## Interface

|

|

||||||

|

|

||||||

The Data Controller has 5 tabs, as follows:

|

|

||||||

|

|

||||||

* *[Viewer](#viewer)*. This tab lets users view any table to which they have been granted access in metadata. They can also download the data as csv, excel, or as a SAS program (datalines). Primary key fields are coloured green.

|

|

||||||

* *[Editor](#editor)*. This tab enables users to add, modify or delete data. This can be done directly in the browser, or by uploading a CSV file. Values can also be copy-pasted from a spreadsheet. Once changes are ready, they can be submitted, with a corresponding reason.

|

|

||||||

* *[Submitted](#submitted)*. This shows an editor the outstanding changes that have been submitted for approval (but have not yet been approved or rejected).

|

|

||||||

* *[Approvals](#approvals)*. This shows an approver all their outstanding approval requests.

|

|

||||||

* *[History](#history)*. This shows an auditor, or other interested party, what changes have been submitted for each table.

|

|

||||||

|

|

||||||

### Viewer

|

|

||||||

|

|

||||||

#### Overview

|

|

||||||

The viewer screen provides users with a raw view of underlying data. It is only possible to view tables that have been registered in metadata.

|

|

||||||

Advantages of using the viewer (over client tools) for browsing data include:

|

|

||||||

|

|

||||||

* Ability to provide links to tables / filtered views of tables (just copy url)

|

|

||||||

* In the case of SAS datasets, prevent file locks from occurring

|

|

||||||

* Ability to quickly download a CSV / Excel / SAS Cards program for that table

|

|

||||||

|

|

||||||

#### Usage

|

|

||||||

Choose a library, then a table, and click view to see the first 5000 rows.

|

|

||||||

A filter option is provided should you wish to view a different section of rows.

|

|

||||||

|

|

||||||

The Download button gives three options for obtaining the current view of data:

|

|

||||||

|

|

||||||

1) CSV. This provides a comma delimited file.

|

|

||||||

|

|

||||||

2) Excel. This provides a tab delimited file.

|

|

||||||

|

|

||||||

3) SAS. This provides a SAS program with data as datalines, so that the data can be rebuilt as a SAS table.

|

|

||||||

|

|

||||||

Note - if the table is registered in Data Controller as being TXTEMPORAL (SCD2) then the download option will prefilter for the _current_ records and remove the valid from / valid to variables. This makes the CSV a suitable format for subsequent DC file upload, if desired.
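To make the prefilter concrete, here is a minimal Python sketch of selecting the current records from an SCD2 table and dropping the validity columns. The column names `tx_from` and `tx_to` are hypothetical (the real names depend on how the table is configured), and the actual download logic runs in SAS.

```python
from datetime import datetime


def current_records(rows, now, valid_from="tx_from", valid_to="tx_to"):
    """Keep rows valid at `now`, without the valid from / valid to columns."""
    out = []
    for row in rows:
        if row[valid_from] <= now < row[valid_to]:
            out.append({k: v for k, v in row.items() if k not in (valid_from, valid_to)})
    return out


HIGH_DATE = datetime(9999, 12, 31)  # open-ended validity marker (an assumption)
history = [
    {"id": 1, "price": 10, "tx_from": datetime(2020, 1, 1), "tx_to": datetime(2023, 1, 1)},
    {"id": 1, "price": 12, "tx_from": datetime(2023, 1, 1), "tx_to": HIGH_DATE},
]
print(current_records(history, datetime(2024, 6, 1)))
```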

|

|

||||||

|

|

||||||

### Editor

|

|

||||||

|

|

||||||

The Editor screen lets users who have been pre-authorised (via the `DATACTRL.MPE_SECURITY` table) edit a particular table. A user selects a library and table, and then has 3 options:

|

|

||||||

|

|

||||||

1 - *Filter*. The user can filter before proceeding to perform edits.

|

|

||||||

|

|

||||||

2 - *Upload*. If you have a lot of data, you can [upload it directly](files). The changes are then approved in the usual way.

|

|

||||||

|

|

||||||

3 - *Edit*. This is the main interface, where data is displayed in tabular format. The first column is always "Delete?", as this allows you to mark rows for deletion. Note that removing a row from display does not mark it for deletion! It simply means that this row is not part of the changeset being submitted.

|

|

||||||

The next set of columns are the Primary Key, and are shaded grey. If the table has a surrogate / retained key, then it is the Business Key that is shown here (the RK field is calculated / updated at the backend). For SCD2 type tables, the 'validity' fields are not shown. It is assumed that the user is always working with the current version of the data, and the view is filtered as such.

|

|

||||||

After this, remaining columns are shown. Dates / datetime fields have appropriate datepickers. Other fields may also have dropdowns to ensure entry of standard values, these can be configured in the `DATACTRL.MPE_SELECTBOX` table.

|

|

||||||

|

|

||||||

New rows can be added using the right click context menu, or the 'Add Row' button. The data can also be sorted by clicking on the column headers.

|

|

||||||

|

|

||||||

When ready to submit, hit the SUBMIT button and enter a reason for the change. The owners of the data are now alerted (so long as their email addresses are in metadata) with a link to the approve screen.

|

|

||||||

If you are also an approver you can approve this change yourself.

|

|

||||||

|

|

||||||

#### Special Missings

|

|

||||||

|

|

||||||

Data Controller supports special missing numerics, ie - a single letter or underscore. These should be submitted _without_ the leading period. The letters are not case sensitive.
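As an illustration of the accepted input, the Python sketch below recognises a single letter or underscore and shows the SAS notation it corresponds to (`.A` to `.Z` and `._`). The function name is hypothetical and this is not the validation code used by Data Controller.

```python
import re

# A special missing is submitted as exactly one letter or an underscore.
_SPECIAL = re.compile(r"[A-Za-z_]")


def normalise_special_missing(value: str):
    """Return the SAS notation (eg '.A') for a special missing, else None."""
    text = value.strip()
    if _SPECIAL.fullmatch(text):
        return "." + text.upper()  # letters are not case sensitive
    return None


for candidate in ["a", "Z", "_", ".A", "12"]:
    print(repr(candidate), "->", normalise_special_missing(candidate))
```

Here `.A` is rejected because values should be submitted without the leading period.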

|

|

||||||

|

|

||||||

#### BiTemporal Tables

|

|

||||||

|

|

||||||

The Data Controller only permits BiTemporal data uploads at a single point in time - so for convenience, when viewing data in the edit screen, only the most recent records are displayed. To edit earlier records, either use file upload, or apply a filter.

|

|

||||||

|

|

||||||

### Submitted

|

|

||||||

This page shows a list of the changes you have submitted (that are not yet approved).

|

|

||||||

|

|

||||||

### Approvals

|

|

||||||

This shows the list of changes that have been submitted to you (or your groups) for approval.

|

|

||||||

|

|

||||||

### History

|

|

||||||

View the list of changes to each table, who made the change, when, etc.

|

|

||||||

|

|

||||||

@ -1,49 +0,0 @@

|

|||||||

# Data Controller for SAS® - Dates & Datetimes

|

|

||||||

|

|

||||||

## Overview

|

|

||||||

|

|

||||||

Dates & datetimes are stored as plain numerics in regular SAS tables. In order for the Data Controller to recognise these values as dates / datetimes a format must be applied.
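For readers less familiar with SAS, the "plain numerics" are counts from 1 January 1960: days for dates, seconds for datetimes. The Python sketch below shows the conversion; the function names are illustrative only.

```python
from datetime import date, datetime

SAS_EPOCH_DATE = date(1960, 1, 1)
SAS_EPOCH_DATETIME = datetime(1960, 1, 1)


def to_sas_date(d: date) -> int:
    """Number of days since 1 January 1960."""
    return (d - SAS_EPOCH_DATE).days


def to_sas_datetime(dt: datetime) -> int:
    """Number of whole seconds since midnight, 1 January 1960."""
    return int((dt - SAS_EPOCH_DATETIME).total_seconds())


print(to_sas_date(date(2020, 1, 1)))                 # 21915
print(to_sas_datetime(datetime(1960, 1, 1, 0, 1)))   # 60
```

Without a format such as `DATE.` or `DATETIME.`, a value like 21915 is indistinguishable from any other number, which is why the format is required.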

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Supported date formats:

|

|

||||||

|

|

||||||

* DATE.

|

|

||||||

* DDMMYY.

|

|

||||||

* MMDDYY.

|

|

||||||

* YYMMDD.

|

|

||||||

* E8601DA.

|

|

||||||

* B8601DA.

|

|

||||||

* NLDATE.

|

|

||||||

|

|

||||||

Supported datetime formats:

|

|

||||||

|

|

||||||

* DATETIME.

|

|

||||||

* NLDATM.

|

|

||||||

|

|

||||||

Supported time formats:

|

|

||||||

|

|

||||||

* TIME.

|

|

||||||

* HHMM.

|

|

||||||

|

|

||||||

In SAS 9, this format must also be present / updated in the metadata view of the (physical) table to be displayed properly. This can be done using DI Studio, or by running the following (template) code:

|

|

||||||

|

|

||||||

```sas
proc metalib;
  omr (library="Your Library");
  folder="/Shared Data/table storage location";
  update_rule=(delete);
run;
```

|

|

||||||

|

|

||||||

!!! note

|

|

||||||

Data Controller does not support decimals when EDITING. For datetimes, this means that values must be rounded to 1 second (milliseconds are not supported).
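If source values carry fractional seconds, they can be rounded before loading. The following Python sketch shows one way to round to the nearest whole second; it is an illustration only, and the function name is hypothetical.

```python
from datetime import datetime, timedelta


def round_to_second(dt: datetime) -> datetime:
    """Round a datetime to the nearest whole second."""
    # Add half a second, then drop the microseconds.
    shifted = dt + timedelta(microseconds=500_000)
    return shifted.replace(microsecond=0)


print(round_to_second(datetime(2024, 1, 1, 12, 0, 0, 600_000)))
print(round_to_second(datetime(2024, 1, 1, 12, 0, 0, 400_000)))
```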

|

|

||||||

|

|

||||||

If you have other dates / datetimes / times you would like us to support, do [get in touch](https://datacontroller.io/contact)!

|

|

||||||


@ -1,24 +0,0 @@

|

|||||||

---

|

|

||||||

layout: article

|

|

||||||

title: Groups

|

|

||||||

description: By default, Data Controller will work with the SAS Groups defined in Viya, Metadata, or SASjs Server. It is also possible to define custom groups with Data Controller itself.

|

|

||||||

og_image: https://i.imgur.com/drGQBBV.png

|

|

||||||

---

|

|

||||||

|

|

||||||

# Adding Groups

|

|

||||||

|

|

||||||

## Overview

|

|

||||||

By default, Data Controller will work with the SAS Groups defined in Viya, Metadata, or SASjs Server. It is also possible to define custom groups with Data Controller itself - to do this simply add the user and group name (and optionally, a group description) in the `DATACTRL.MPE_GROUPS` table.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Data Controller Admin Group

|

|

||||||

|

|

||||||

When configuring Data Controller for the first time, a group is designated as the 'admin' group. This group has unrestricted access to Data Controller. To change this group, modify the `%let dc_admin_group=` entry in the settings program, located as follows:

|

|

||||||

|

|

||||||

* **SAS Viya:** $(appLoc)/services/settings.sas

|

|

||||||

* **SAS 9:** $(appLoc)/services/public/Data_Controller_Settings

|

|

||||||

* **SASjs Server:** $(appLoc)/services/public/settings.sas

|

|

||||||

|

|

||||||

|

|

||||||

To prevent others from changing this group, ensure the Data Controller appLoc (deployment folder) is write-protected - eg RM (metadata) or using Viya Authorisation rules.

|

|

||||||

@ -1,69 +0,0 @@

|

|||||||

---

|

|

||||||

layout: article

|

|

||||||

title: DC Options

|

|

||||||

description: Options in Data Controller are set in the MPE_CONFIG table and apply to all users.

|

|

||||||

og_title: Data Controller for SAS® Options

|

|

||||||

og_image: /img/mpe_config.png

|

|

||||||

---

|

|

||||||

# Data Controller for SAS® - Options

|

|

||||||

|

|

||||||

The [MPE_CONFIG](/tables/mpe_config/) table provides a number of system options, which apply to all users. The table may be re-purposed for other applications, so long as scopes beginning with "DC_" are avoided.

|

|

||||||

|

|

||||||

Currently used scopes include:

|

|

||||||

|

|

||||||

* DC

|

|

||||||

* DC_CATALOG

|

|

||||||

|

|

||||||

## DC Scope

|

|

||||||

|

|

||||||

### DC_EMAIL_ALERTS

|

|

||||||

Set to YES or NO to enable email alerts. This requires email options to be preconfigured (mail server etc).

|

|

||||||

|

|

||||||

### DC_MAXOBS_WEBEDIT

|

|

||||||

By default, a maximum of 100 observations can be edited in the browser at one time. This number can be increased, but note that the following factors will impact performance:

|

|

||||||

|

|

||||||

* Number of configured [Validations](/dcc-validations)

|

|

||||||

* Browser type and version (works best in Chrome)

|

|

||||||

* Number (and size) of columns

|

|

||||||

* Speed of client machine (laptop/desktop)

|

|

||||||

|

|

||||||

### DC_REQUEST_LOGS

|

|

||||||